Smart Alex

Alex was aptly named because she’s, like, super smart. She likes teaching people, and her hobby is posing people questions so that she can explain the answers to them. Alex appears at the end of each chapter of Discovering Statistics Using JASP to pose you some questions and give you tasks to help you to practice your data analysis skills. This page contains her answers to those questions.

Chapter 1

Task 1.1

What are (broadly speaking) the five stages of the research process?

- Generating a research question: through an initial observation (hopefully backed up by some data).

- Generate a theory to explain your initial observation.

- Generate hypotheses: break your theory down into a set of testable predictions.

- Collect data to test the theory: decide on what variables you need to measure to test your predictions and how best to measure or manipulate those variables.

- Analyse the data: look at the data visually and by fitting a statistical model to see if it supports your predictions (and therefore your theory). At this point you should return to your theory and revise it if necessary.

Task 1.2

What is the fundamental difference between experimental and correlational research?

In a word, causality. In experimental research we manipulate a variable (predictor, independent variable) to see what effect it has on another variable (outcome, dependent variable). This manipulation, if done properly, allows us to compare situations where the causal factor is present to situations where it is absent. Therefore, if there are differences between these situations, we can attribute cause to the variable that we manipulated. In correlational research, we measure things that naturally occur and so we cannot attribute cause but instead look at natural covariation between variables.

Task 1.3

What is the level of measurement of the following variables?

- The number of downloads of different bands’ songs on iTunes:

- This is a discrete ratio measure. It is discrete because you can download only whole songs, and it is ratio because it has a true and meaningful zero (no downloads at all).

- The names of the bands downloaded.

- This is a nominal variable. Bands can be identified by their name, but the names have no meaningful order. The fact that Norwegian black metal band 1349 called themselves 1349 does not make them better than British boy-band has-beens 911; the fact that 911 were a bunch of talentless idiots does, though.

- Their positions in the download chart.

- This is an ordinal variable. We know that the band at number 1 sold more than the band at number 2 or 3 (and so on) but we don’t know how many more downloads they had. So, this variable tells us the order of magnitude of downloads, but doesn’t tell us how many downloads there actually were.

- The money earned by the bands from the downloads.

- This variable is continuous and ratio. It is continuous because money (pounds, dollars, euros or whatever) can be broken down into very small amounts (you can earn fractions of euros even though there may not be an actual coin to represent these fractions).

- The weight of drugs bought by the band with their royalties.

- This variable is continuous and ratio. If the drummer buys 100 g of cocaine and the singer buys 1 kg, then the singer has 10 times as much.

- The type of drugs bought by the band with their royalties.

- This variable is categorical and nominal: the name of the drug tells us something meaningful (crack, cannabis, amphetamine, etc.) but has no meaningful order.

- The phone numbers that the bands obtained because of their fame.

- This variable is categorical and nominal too: the phone numbers have no meaningful order; they might as well be letters. A bigger phone number did not mean that it was given by a better person.

- The gender of the people giving the bands their phone numbers.

- This variable is categorical: the people dishing out their phone numbers could fall into one of several categories based on how they self-identify when asked about their gender (their gender identity could be fluid). Taking a very simplistic view of gender, the variable might contain categories of male, female, and non-binary.

- This variable is categorical: the people dishing out their phone numbers could fall into one of several categories based on how they self-identify when asked about their gender (their gender identity could be fluid). Taking a very simplistic view of gender, the variable might contain categories of male, female, and non-binary.

- The instruments played by the band members.

- This variable is categorical and nominal too: the instruments have no meaningful order but their names tell us something useful (guitar, bass, drums, etc.).

- The time they had spent learning to play their instruments.

- This is a continuous and ratio variable. The amount of time could be split into infinitely small divisions (nanoseconds even) and there is a meaningful true zero (no time spent learning your instrument means that, like 911, you can’t play at all).

Task 1.4

Say I own 857 CDs. My friend has written a computer program that uses a webcam to scan my shelves in my house where I keep my CDs and measure how many I have. His program says that I have 863 CDs. Define measurement error. What is the measurement error in my friend’s CD counting device?

Measurement error is the difference between the true value of something and the numbers used to represent that value. In this trivial example, the measurement error is 6 CDs. In this example we know the true value of what we’re measuring; usually we don’t have this information, so we have to estimate this error rather than knowing its actual value.

Task 1.5

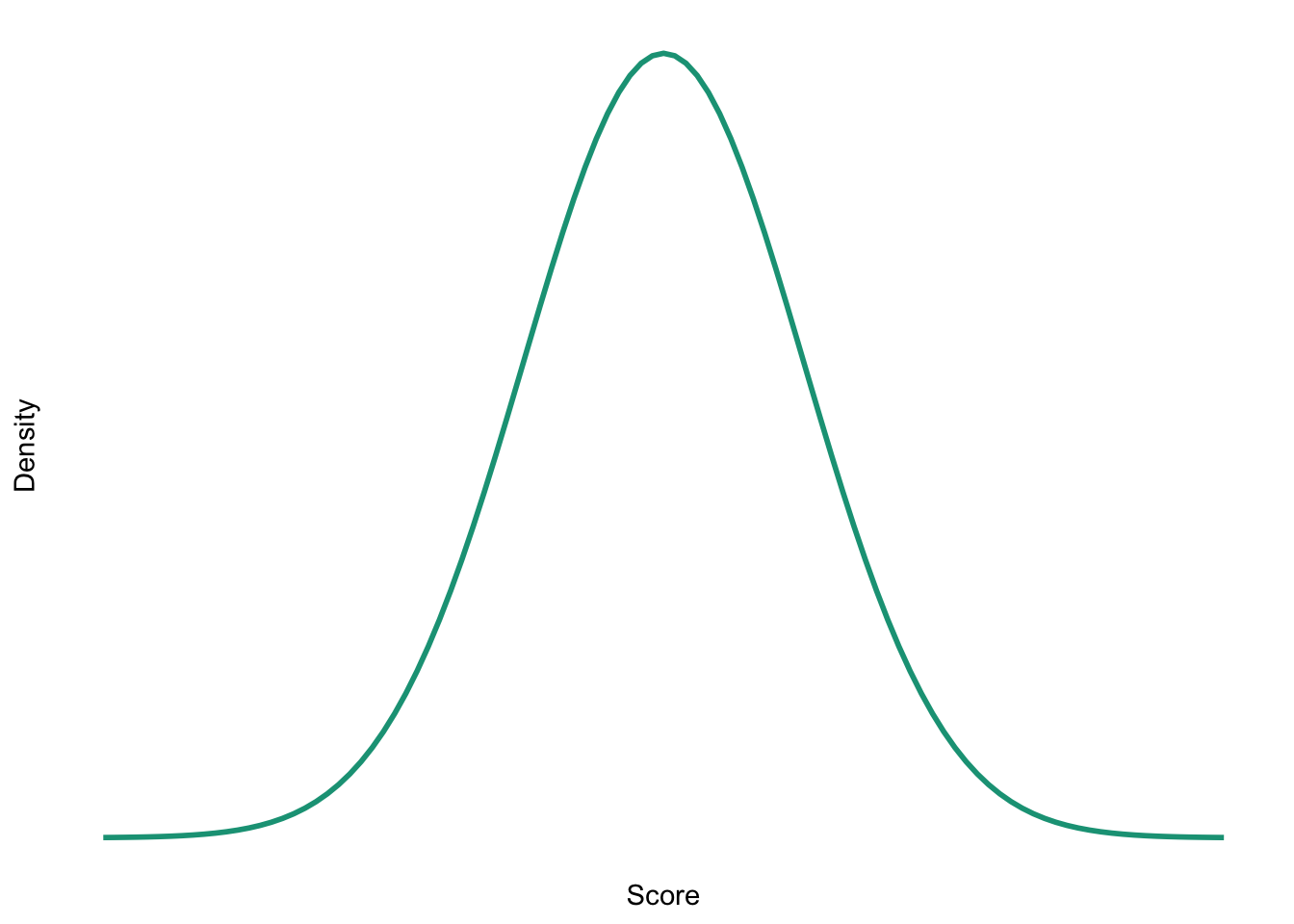

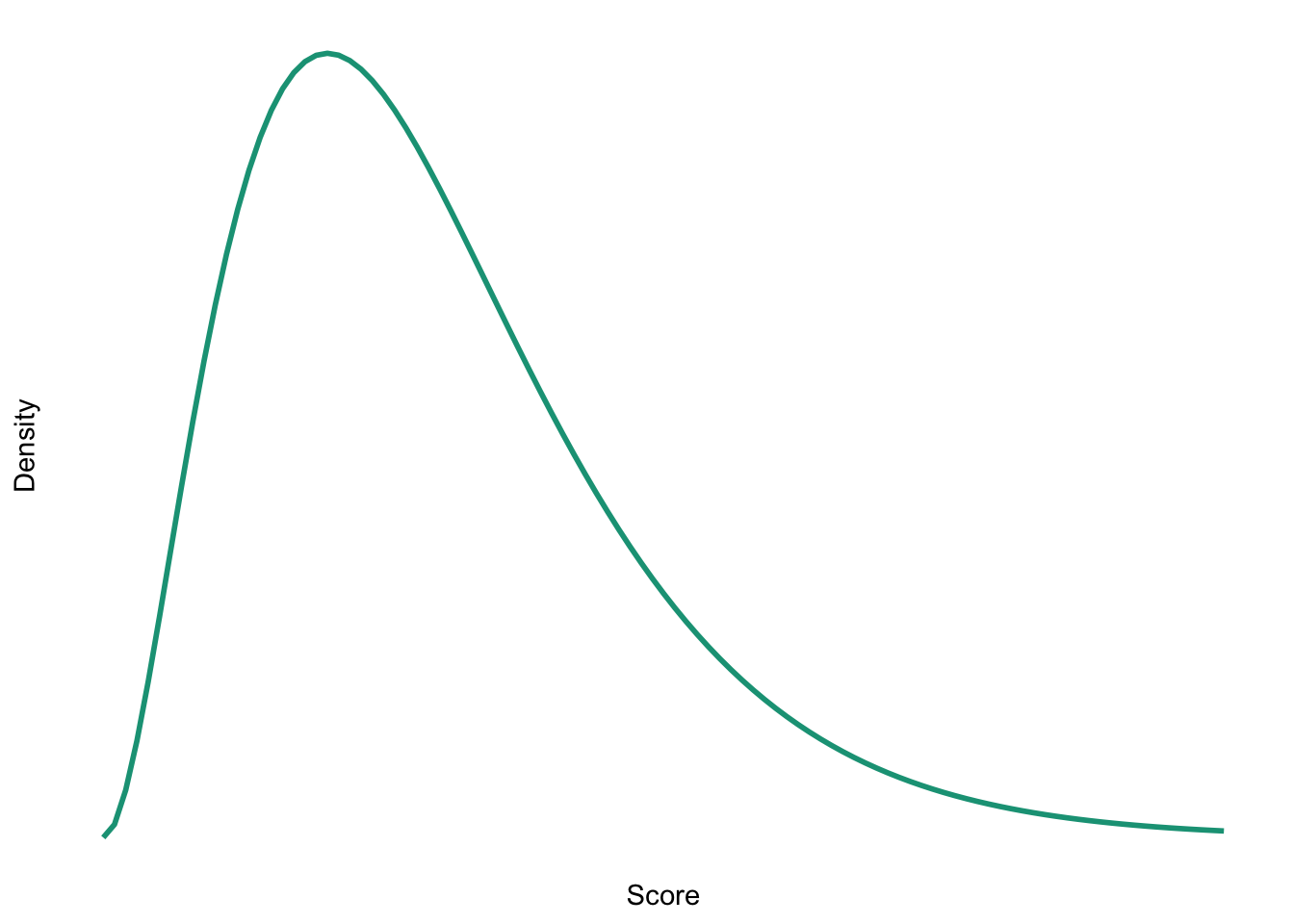

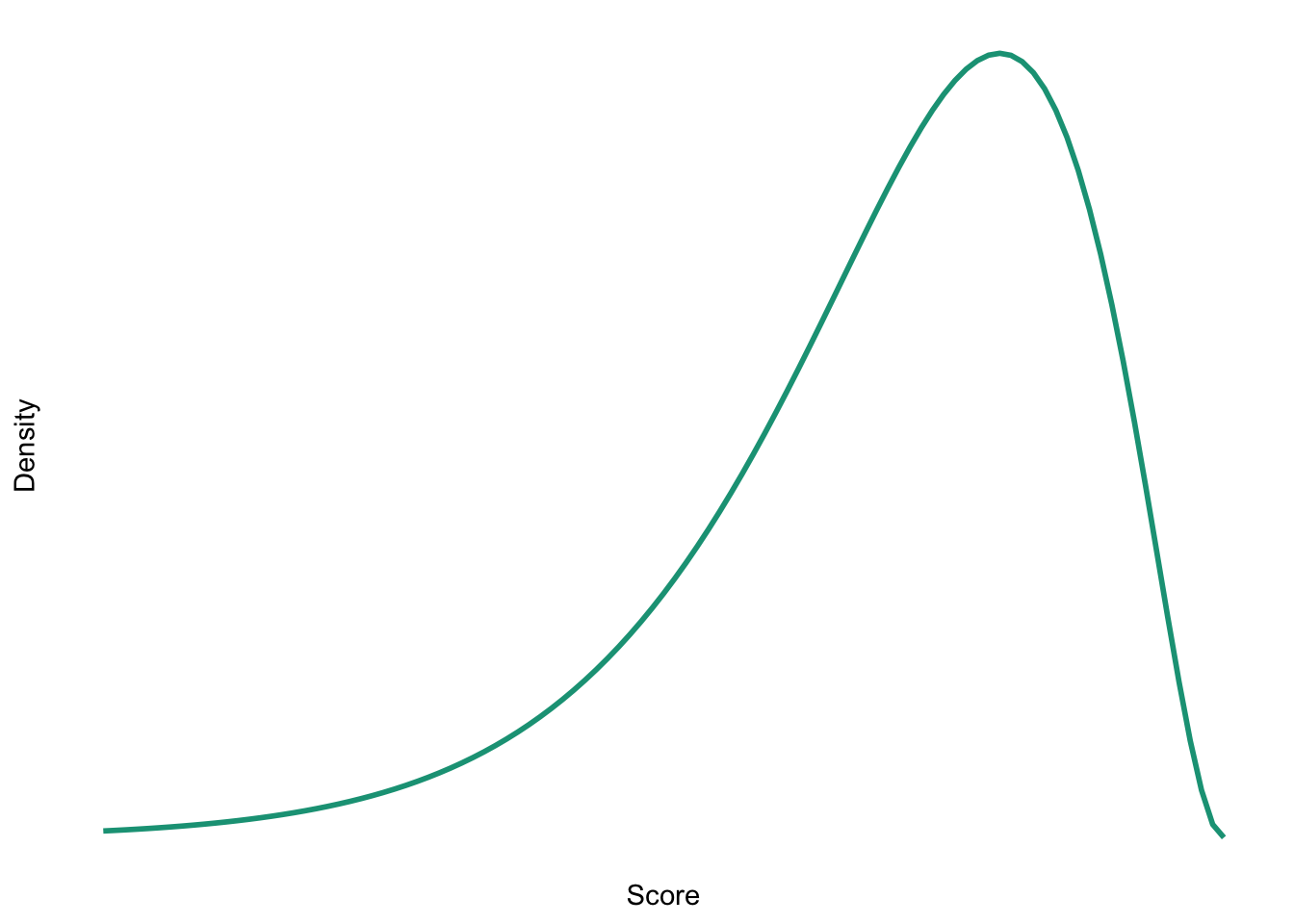

Sketch the shape of a normal distribution, a positively skewed distribution and a negatively skewed distribution.

Normal

Positive skew

Negative skew

Task 1.6

In 2011 I got married and we went to Disney Florida for our honeymoon. We bought some bride and groom Mickey Mouse hats and wore them around the parks. The staff at Disney are really nice and upon seeing our hats would say ‘congratulations’ to us. We counted how many times people said congratulations over 7 days of the honeymoon: 5, 13, 7, 14, 11, 9, 17. Calculate the mean, median, sum of squares, variance and standard deviation of these data.

First compute the mean:

\[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{5+13+7+14+11+9+17}{7} \\ &= \frac{76}{7} \\ &= 10.86 \end{aligned} \]

To calculate the median, first let’s arrange the scores in ascending order: 5, 7, 9, 11, 13, 14, 17. The median will be the (n + 1)/2th score. There are 7 scores, so this will be the 8/2 = 4th. The 4th score in our ordered list is 11.

To calculate the sum of squares, first take the mean from each score, then square this difference, finally, add up these squared values:

| Score | Error (score - mean) | Error squared | |

|---|---|---|---|

| 5 | -5.86 | 34.34 | |

| 13 | 2.14 | 4.58 | |

| 7 | -3.86 | 14.90 | |

| 14 | 3.14 | 9.86 | |

| 11 | 0.14 | 0.02 | |

| 9 | -1.86 | 3.46 | |

| 17 | 6.14 | 37.70 | |

| Total | — | — | 104.86 |

So, the sum of squared errors is:

\[ \begin{aligned} \text{SS} &= 34.34 + 4.58 + 14.90 + 9.86 + 0.02 + 3.46 + 37.70 \\ &= 104.86 \\ \end{aligned} \]

The variance is the sum of squared errors divided by the degrees of freedom:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{104.86}{6} \\ &= 17.48 \end{aligned} \]

The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{17.48} \\ &= 4.18 \end{aligned} \]

Task 1.7

In this chapter we used an example of the time taken for 21 heavy smokers to fall off a treadmill at the fastest setting (18, 16, 18, 24, 23, 22, 22, 23, 26, 29, 32, 34, 34, 36, 36, 43, 42, 49, 46, 46, 57). Calculate the sums of squares, variance and standard deviation of these data.

To calculate the sum of squares, take the mean from each value, then square this difference. Finally, add up these squared values (the values in the final column). The sum of squared errors is a massive 2685.24.

| Score | Mean | Difference | Difference squared | |

|---|---|---|---|---|

| 18 | 32.19 | -14.19 | 201.356 | |

| 16 | 32.19 | -16.19 | 262.116 | |

| 18 | 32.19 | -14.19 | 201.356 | |

| 24 | 32.19 | -8.19 | 67.076 | |

| 23 | 32.19 | -9.19 | 84.456 | |

| 22 | 32.19 | -10.19 | 103.836 | |

| 22 | 32.19 | -10.19 | 103.836 | |

| 23 | 32.19 | -9.19 | 84.456 | |

| 26 | 32.19 | -6.19 | 38.316 | |

| 29 | 32.19 | -3.19 | 10.176 | |

| 32 | 32.19 | -0.19 | 0.036 | |

| 34 | 32.19 | 1.81 | 3.276 | |

| 34 | 32.19 | 1.81 | 3.276 | |

| 36 | 32.19 | 3.81 | 14.516 | |

| 36 | 32.19 | 3.81 | 14.516 | |

| 43 | 32.19 | 10.81 | 116.856 | |

| 42 | 32.19 | 9.81 | 96.236 | |

| 49 | 32.19 | 16.81 | 282.576 | |

| 46 | 32.19 | 13.81 | 190.716 | |

| 46 | 32.19 | 13.81 | 190.716 | |

| 57 | 32.19 | 24.81 | 615.536 | |

| Total | — | — | — | 2685.236 |

The variance is the sum of squared errors divided by the degrees of freedom (\(N-1\)). There were 21 scores and so the degrees of freedom were 20. The variance is, therefore:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{2685.24}{20} \\ &= 134.26 \end{aligned} \]

The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{134.26} \\ &= 11.59 \end{aligned} \]

Task 1.8

Sports scientists sometimes talk of a ‘red zone’, which is a period during which players in a team are more likely to pick up injuries because they are fatigued. When a player hits the red zone it is a good idea to rest them for a game or two. At a prominent London football club that I support, they measured how many consecutive games the 11 first team players could manage before hitting the red zone: 10, 16, 8, 9, 6, 8, 9, 11, 12, 19, 5. Calculate the mean, standard deviation, median, range and interquartile range.

First we need to compute the mean:

\[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{10+16+8+9+6+8+9+11+12+19+5}{11} \\ &= \frac{113}{11} \\ &= 10.27 \end{aligned} \]

Then the standard deviation, which we do as follows:

| Score | Error (score - mean) | Error squared | |

|---|---|---|---|

| 10 | -0.27 | 0.07 | |

| 16 | 5.73 | 32.83 | |

| 8 | -2.27 | 5.15 | |

| 9 | -1.27 | 1.61 | |

| 6 | -4.27 | 18.23 | |

| 8 | -2.27 | 5.15 | |

| 9 | -1.27 | 1.61 | |

| 11 | 0.73 | 0.53 | |

| 12 | 1.73 | 2.99 | |

| 19 | 8.73 | 76.21 | |

| 5 | -5.27 | 27.77 | |

| Total | — | — | 172.15 |

So, the sum of squared errors is:

\[ \begin{aligned} \text{SS} &= 0.07 + 32.83 + 5.15 + 1.61 + 18.23 + 5.15 + 1.61 + 0.53 + 2.99 + 76.21 + 27.77 \\ &= 172.15 \\ \end{aligned} \]

The variance is the sum of squared errors divided by the degrees of freedom:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{172.15}{10} \\ &= 17.22 \end{aligned} \]

The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{17.22} \\ &= 4.15 \end{aligned} \]

- To calculate the median, range and interquartile range, first let’s arrange the scores in ascending order: 5, 6, 8, 8, 9, 9, 10, 11, 12, 16, 19. The median: The median will be the (\(n + 1\))/2th score. There are 11 scores, so this will be the 12/2 = 6th. The 6th score in our ordered list is 9 games. Therefore, the median number of games is 9.

- The lower quartile: This is the median of the lower half of scores. If we split the data at 9 (the 6th score), there are 5 scores below this value. The median of 5 = 6/2 = 3rd score. The 3rd score is 8, the lower quartile is therefore 8 games.

- The upper quartile: This is the median of the upper half of scores. If we split the data at 9 again (not including this score), there are 5 scores above this value. The median of 5 = 6/2 = 3rd score above the median. The 3rd score above the median is 12; the upper quartile is therefore 12 games.

- The range: This is the highest score (19) minus the lowest (5), i.e. 14 games.

- The interquartile range: This is the difference between the upper and lower quartile: 12−8 = 4 games.

Task 1.9

Celebrities always seem to be getting divorced. The (approximate) length of some celebrity marriages in days are: 240 (J-Lo and Cris Judd), 144 (Charlie Sheen and Donna Peele), 143 (Pamela Anderson and Kid Rock), 72 (Kim Kardashian, if you can call her a celebrity), 30 (Drew Barrymore and Jeremy Thomas), 26 (Axl Rose and Erin Everly), 2 (Britney Spears and Jason Alexander), 150 (Drew Barrymore again, but this time with Tom Green), 14 (Eddie Murphy and Tracy Edmonds), 150 (Renee Zellweger and Kenny Chesney), 1657 (Jennifer Aniston and Brad Pitt). Compute the mean, median, standard deviation, range and interquartile range for these lengths of celebrity marriages.

First we need to compute the mean:

\[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{240+144+143+72+30+26+2+150+14+150+1657}{11} \\ &= \frac{2628}{11} \\ &= 238.91 \end{aligned} \]

Then the standard deviation, which we do as follows:

| Score | Error (score - mean) | Error squared | |

|---|---|---|---|

| 240 | 1.09 | 1.19 | |

| 144 | -94.91 | 9007.91 | |

| 143 | -95.91 | 9198.73 | |

| 72 | -166.91 | 27858.95 | |

| 30 | -208.91 | 43643.39 | |

| 26 | -212.91 | 45330.67 | |

| 2 | -236.91 | 56126.35 | |

| 150 | -88.91 | 7904.99 | |

| 14 | -224.91 | 50584.51 | |

| 150 | -88.91 | 7904.99 | |

| 1657 | 1418.09 | 2010979.25 | |

| Total | — | — | 2268541 |

So, the sum of squared errors is the sum of the final column. The variance is the sum of squared errors divided by the degrees of freedom:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{2268541}{10} \\ &= 226854.1 \end{aligned} \]

The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{226854.1} \\ &= 476.29 \end{aligned} \]

- To calculate the median, range and interquartile range, first let’s arrange the scores in ascending order: 2, 14, 26, 30, 72, 143, 144, 150, 150, 240, 1657. The median: The median will be the (n + 1)/2th score. There are 11 scores, so this will be the 12/2 = 6th. The 6th score in our ordered list is 143. The median length of these celebrity marriages is therefore 143 days.

- The lower quartile: This is the median of the lower half of scores. If we split the data at 143 (the 6th score), there are 5 scores below this value. The median of 5 = 6/2 = 3rd score. The 3rd score is 26, the lower quartile is therefore 26 days.

- The upper quartile: This is the median of the upper half of scores. If we split the data at 143 again (not including this score), there are 5 scores above this value. The median of 5 = 6/2 = 3rd score above the median. The 3rd score above the median is 150; the upper quartile is therefore 150 days.

- The range: This is the highest score (1657) minus the lowest (2), i.e. 1655 days.

- The interquartile range: This is the difference between the upper and lower quartile: 150−26 = 124 days.

Task 1.10

Repeat Task 9 but excluding Jennifer Anniston and Brad Pitt’s marriage. How does this affect the mean, median, range, interquartile range, and standard deviation? What do the differences in values between Tasks 9 and 10 tell us about the influence of unusual scores on these measures?

First let’s compute the new mean:

\[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{240+144+143+72+30+26+2+150+14+150}{11} \\ &= \frac{971}{11} \\ &= 97.1 \end{aligned} \]

The mean length of celebrity marriages is now 97.1 days compared to 238.91 days when Jennifer Aniston and Brad Pitt’s marriage was included. This demonstrates that the mean is greatly influenced by extreme scores.

Let’s now calculate the standard deviation excluding Jennifer Aniston and Brad Pitt’s marriage:

| Score | Error (score - mean) | Error squared | |

|---|---|---|---|

| 240 | 142.9 | 20420.41 | |

| 144 | 46.9 | 2199.61 | |

| 143 | 45.9 | 2106.81 | |

| 72 | -25.1 | 630.01 | |

| 30 | -67.1 | 4502.41 | |

| 26 | -71.1 | 5055.21 | |

| 2 | -95.1 | 9044.01 | |

| 150 | 52.9 | 2798.41 | |

| 14 | -83.1 | 6905.61 | |

| 150 | 52.9 | 2798.41 | |

| Total | — | — | 56460.9 |

So, the sum of squared errors is:

\[ \begin{aligned} \text{SS} &= 20420.41 + 2199.61 + 2106.81 + 630.01 + 4502.41 + 5055.21 + 9044.01 + 2798.41 + 6905.61 + 2798.41 \\ &= 56460.90 \\ \end{aligned} \]

The variance is the sum of squared errors divided by the degrees of freedom:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{56460.90}{9} \\ &= 6273.43 \end{aligned} \]

The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{6273.43} \\ &= 79.21 \end{aligned} \]

From these calculations we can see that the variance and standard deviation, like the mean, are both greatly influenced by extreme scores. When Jennifer Aniston and Brad Pitt’s marriage was included in the calculations (see Smart Alex Task 9), the variance and standard deviation were much larger, i.e. 226854.09 and 476.29 respectively.

- To calculate the median, range and interquartile range, first, let’s again arrange the scores in ascending order but this time excluding Jennifer Aniston and Brad Pitt’s marriage: 2, 14, 26, 30, 72, 143, 144, 150, 150, 240.

- The median: The median will be the (n + 1)/2 score. There are now 10 scores, so this will be the 11/2 = 5.5th. Therefore, we take the average of the 5th score and the 6th score. The 5th score is 72, and the 6th is 143; the median is therefore 107.5 days.

- The lower quartile: This is the median of the lower half of scores. If we split the data at 107.5 (this score is not in the data set), there are 5 scores below this value. The median of 5 = 6/2 = 3rd score. The 3rd score is 26; the lower quartile is therefore 26 days.

- The upper quartile: This is the median of the upper half of scores. If we split the data at 107.5 (this score is not actually present in the data set), there are 5 scores above this value. The median of 5 = 6/2 = 3rd score above the median. The 3rd score above the median is 150; the upper quartile is therefore 150 days.

- The range: This is the highest score (240) minus the lowest (2), i.e. 238 days. You’ll notice that without the extreme score the range drops dramatically from 1655 to 238 – less than half the size.

- The interquartile range: This is the difference between the upper and lower quartile: 150 − 26 = 124 days of marriage. This is the same as the value we got when Jennifer Aniston and Brad Pitt’s marriage was included. This demonstrates the advantage of the interquartile range over the range, i.e. it isn’t affected by extreme scores at either end of the distribution

Chapter 2

Task 2.1

Why do we use samples?

We are usually interested in populations, but because we cannot collect data from every human being (or whatever) in the population, we collect data from a small subset of the population (known as a sample) and use these data to infer things about the population as a whole.

Task 2.2

What is the mean and how do we tell if it’s representative of our data?

The mean is a simple statistical model of the centre of a distribution of scores. A hypothetical estimate of the ‘typical’ score. We use the variance, or standard deviation, to tell us whether it is representative of our data. The standard deviation is a measure of how much error there is associated with the mean: a small standard deviation indicates that the mean is a good representation of our data.

Task 2.3

What’s the difference between the standard deviation and the standard error?

The standard deviation tells us how much observations in our sample differ from the mean value within our sample. The standard error tells us not about how the sample mean represents the sample itself, but how well the sample mean represents the population mean. The standard error is the standard deviation of the sampling distribution of a statistic. For a given statistic (e.g. the mean) it tells us how much variability there is in this statistic across samples from the same population. Large values, therefore, indicate that a statistic from a given sample may not be an accurate reflection of the population from which the sample came.

Task 2.4

In Chapter 1 we used an example of the time in seconds taken for 21 heavy smokers to fall off a treadmill at the fastest setting (18, 16, 18, 24, 23, 22, 22, 23, 26, 29, 32, 34, 34, 36, 36, 43, 42, 49, 46, 46, 57). Calculate standard error and 95% confidence interval for these data.

If you did the tasks in Chapter 1, you’ll know that the mean is 32.19 seconds:

\[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{16+(2\times18)+(2\times22)+(2\times23)+24+26+29+32+(2\times34)+(2\times36)+42+43+(2\times46)+49+57}{21} \\ &= \frac{676}{21} \\ &= 32.19 \end{aligned} \]

We also worked out that the sum of squared errors was 2685.24; the variance was 2685.24/20 = 134.26; the standard deviation is the square root of the variance, so was \(\sqrt(134.26)\) = 11.59. The standard error will be:

\[ SE = \frac{s}{\sqrt{N}} = \frac{11.59}{\sqrt{21}} = 2.53 \]

The sample is small, so to calculate the confidence interval we need to find the appropriate value of t. First we need to calculate the degrees of freedom, \(N − 1\). With 21 data points, the degrees of freedom are 20. For a 95% confidence interval we can look up the value in the column labelled ‘Two-Tailed Test’, ‘0.05’ in the table of critical values of the t-distribution (Appendix). The corresponding value is 2.09. The confidence intervals is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(2.09 \times SE)) \\ &= 32.19 – (2.09 × 2.53) \\ & = 26.90 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(2.09 \times SE) \\ &= 32.19 + (2.09 × 2.53) \\ &= 37.48 \end{aligned} \]

Task 2.5

What do the sum of squares, variance and standard deviation represent? How do they differ?

All of these measures tell us something about how well the mean fits the observed sample data. Large values (relative to the scale of measurement) suggest the mean is a poor fit of the observed scores, and small values suggest a good fit. They are also, therefore, measures of dispersion, with large values indicating a spread-out distribution of scores and small values showing a more tightly packed distribution. These measures all represent the same thing, but differ in how they express it. The sum of squared errors is a ‘total’ and is, therefore, affected by the number of data points. The variance is the ‘average’ variability but in units squared. The standard deviation is the average variation but converted back to the original units of measurement. As such, the size of the standard deviation can be compared to the mean (because they are in the same units of measurement).

Task 2.6

What is a test statistic and what does it tell us?

A test statistic is a statistic for which we know how frequently different values occur. The observed value of such a statistic is typically used to test hypotheses, or to establish whether a model is a reasonable representation of what’s happening in the population.

Task 2.7

What are Type I and Type II errors?

A Type I error occurs when we believe that there is a genuine effect in our population, when in fact there isn’t. A Type II error occurs when we believe that there is no effect in the population when, in reality, there is.

Task 2.8

What is statistical power?

Power is the ability of a test to detect an effect of a particular size (a value of 0.8 is a good level to aim for).

Task 2.9

Figure 2.16 shows two experiments that looked at the effect of singing versus conversation on how much time a woman would spend with a man. In both experiments the means were 10 (singing) and 12 (conversation), the standard deviations in all groups were 3, but the group sizes were 10 per group in the first experiment and 100 per group in the second. Compute the values of the confidence intervals displayed in the Figure.

Experiment 1:

In both groups, because they have a standard deviation of 3 and a sample size of 10, the standard error will be:

\[ SE = \frac{s}{\sqrt{N}} = \frac{3}{\sqrt{10}} = 0.95 \]

The sample is small, so to calculate the confidence interval we need to find the appropriate value of t. First we need to calculate the degrees of freedom, \(N − 1\). With 10 data points, the degrees of freedom are 9. For a 95% confidence interval we can look up the value in the column labelled ‘Two-Tailed Test’, ‘0.05’ in the table of critical values of the t-distribution (Appendix). The corresponding value is 2.26. The confidence interval for the singing group is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(2.26 \times SE) \\ &= 10 – (2.26 × 0.95) \\ & = 7.85 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(2.26 \times SE) \\ &= 10 + (2.26 × 0.95) \\ &= 12.15 \end{aligned} \]

For the conversation group:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(2.26 \times SE) \\ &= 12 – (2.26 × 0.95) \\ & = 9.85 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(2.26 \times SE) \\ &= 12 + (2.26 × 0.95) \\ &= 14.15 \end{aligned} \]

Experiment 2

In both groups, because they have a standard deviation of 3 and a sample size of 100, the standard error will be:

\[ SE = \frac{s}{\sqrt{N}} = \frac{3}{\sqrt{100}} = 0.3 \]

The sample is large, so to calculate the confidence interval we need to find the appropriate value of z. For a 95% confidence interval we should look up the value of 0.025 in the column labelled Smaller Portion in the table of the standard normal distribution (Appendix). The corresponding value is 1.96. The confidence interval for the singing group is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(1.96 \times SE) \\ &= 10 – (1.96 × 0.3) \\ & = 9.41 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(1.96 \times SE) \\ &= 10 + (1.96 × 0.3) \\ &= 10.59 \end{aligned} \]

For the conversation group:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(1.96 \times SE) \\ &= 12 – (1.96 × 0.3) \\ & = 11.41 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(1.96 \times SE) \\ &= 12 + (1.96 × 0.3) \\ &= 12.59 \end{aligned} \]

Task 2.10

Figure 2.17 shows a similar study to above, but the means were 10 (singing) and 10.01 (conversation), the standard deviations in both groups were 3, and each group contained 1 million people. Compute the values of the confidence intervals displayed in the figure.

In both groups, because they have a standard deviation of 3 and a sample size of 1,000,000, the standard error will be:

\[ SE = \frac{s}{\sqrt{N}} = \frac{3}{\sqrt{1000000}} = 0.003 \]

The sample is large, so to calculate the confidence interval we need to find the appropriate value of z. For a 95% confidence interval we should look up the value of 0.025 in the column labelled Smaller Portion in the table of the standard normal distribution (Appendix). The corresponding value is 1.96. The confidence interval for the singing group is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(1.96 \times SE) \\ &= 10 – (1.96 × 0.003) \\ & = 9.99412 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(1.96 \times SE) \\ &= 10 + (1.96 × 0.003) \\ &= 10.00588 \end{aligned} \]

For the conversation group:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(1.96 \times SE) \\ &= 10.01 – (1.96 × 0.003) \\ & = 10.00412 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(1.96 \times SE) \\ &= 10.01 + (1.96 × 0.003) \\ &= 10.01588 \end{aligned} \]

Note: these values will look slightly different than the plot because the exact means were 10.00147 and 10.01006, but we rounded off to 10 and 10.01 to make life a bit easier. If you use these exact values you’d get, for the singing group:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(1.96 \times SE) \\ &= 10.01006 – (1.96 × 0.003) \\ & = 9.99559 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(1.96 \times SE) \\ &= 10.01006 + (1.96 × 0.003) \\ &= 10.00735 \end{aligned} \]

For the conversation group:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(1.96 \times SE) \\ &= 10.01006 – (1.96 × 0.003) \\ & = 10.00418 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(1.96 \times SE) \\ &= 10.01006 + (1.96 × 0.003) \\ &= 10.01594 \end{aligned} \]

Task 2.11

In Chapter 1 (Task 8) we looked at an example of how many games it took a sportsperson before they hit the ‘red zone’ Calculate the standard error and confidence interval for those data.

We worked out in Chapter 1 that the mean was 10.27, the standard deviation 4.15, and there were 11 sportspeople in the sample. The standard error will be:

\[ SE = \frac{s}{\sqrt{N}} = \frac{4.15}{\sqrt{11}} = 1.25 \] The sample is small, so to calculate the confidence interval we need to find the appropriate value of t. First we need to calculate the degrees of freedom, \(N − 1\). With 11 data points, the degrees of freedom are 10. For a 95% confidence interval we can look up the value in the column labelled ‘Two-Tailed Test’, ‘.05’ in the table of critical values of the t-distribution (Appendix). The corresponding value is 2.23. The confidence interval is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(2.23 \times SE) \\ &= 10.27 – (2.23 × 1.25) \\ & = 7.48 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(2.23 \times SE) \\ &= 10.27 + (2.23 × 1.25) \\ &= 13.06 \end{aligned} \]

Task 2.12

At a rival club to the one I support, they similarly measured the number of consecutive games it took their players before they reached the red zone. The data are: 6, 17, 7, 3, 8, 9, 4, 13, 11, 14, 7. Calculate the mean, standard deviation, and confidence interval for these data.

First we need to compute the mean: \[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{6+17+7+3+8+9+4+13+11+14+7}{11} \\ &= \frac{99}{11} \\ &= 9.00 \end{aligned} \]

Then the standard deviation, which we do as follows:

| Score | Error (score - mean) | Error squared | |

|---|---|---|---|

| 6 | -3 | 9 | |

| 17 | 8 | 64 | |

| 7 | -2 | 4 | |

| 3 | -6 | 36 | |

| 8 | -1 | 1 | |

| 9 | 0 | 0 | |

| 4 | -5 | 25 | |

| 13 | 4 | 16 | |

| 11 | 2 | 4 | |

| 14 | 5 | 25 | |

| 7 | -2 | 4 | |

| Total | — | — | 188 |

The sum of squared errors is:

\[ \begin{aligned} \text{SS} &= 9 + 64 + 4 + 36 + 1 + 0 + 25 + 16 + 4 + 25 + 4 \\ &= 188 \\ \end{aligned} \]

The variance is the sum of squared errors divided by the degrees of freedom:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{188}{10} \\ &= 18.8 \end{aligned} \]

The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{18.8} \\ &= 4.34 \end{aligned} \]

There were 11 sportspeople in the sample, so the standard error will be: \[ SE = \frac{s}{\sqrt{N}} = \frac{4.34}{\sqrt{11}} = 1.31\]

The sample is small, so to calculate the confidence interval we need to find the appropriate value of t. First we need to calculate the degrees of freedom, \(N − 1\). With 11 data points, the degrees of freedom are 10. For a 95% confidence interval we can look up the value in the column labelled ‘Two-Tailed Test’, ‘0.05’ in the table of critical values of the t-distribution (Appendix). The corresponding value is 2.23. The confidence intervals is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(2.23\times SE)) \\ &= 9 – (2.23 × 1.31) \\ & = 6.08 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(2.23\times SE) \\ &= 9 + (2.23 × 1.31) \\ &= 11.92 \end{aligned} \]

Task 2.13

In Chapter 1 (Task 9) we looked at the length in days of 11 celebrity marriages. Here are the approximate lengths in months of nine marriages, one being mine and the others being those of some of my friends and family. In all but two cases the lengths are calculated up to the day I’m writing this, which is 20 June 2023, but the 3- and 111-month durations are marriages that have ended – neither of these is mine, in case you’re wondering: 3, 144, 267, 182, 159, 152, 693, 50, and 111. Calculate the mean, standard deviation and confidence interval for these data.

First we need to compute the mean:

\[ \begin{aligned} \overline{X} &= \frac{\sum_{i=1}^{n} x_i}{n} \\ &= \frac{3 + 144 + 267 + 182 + 159 + 152 + 693 + 50 + 111}{9} \\ &= \frac{1761}{9} \\ &= 195.67 \end{aligned} \]

Compute the standard deviation as follows:

| Score | Error (score - mean) | Error squared | |

|---|---|---|---|

| 3 | -192.67 | 37121.73 | |

| 144 | -51.67 | 2669.79 | |

| 267 | 71.33 | 5087.97 | |

| 182 | -13.67 | 186.87 | |

| 159 | -36.67 | 1344.69 | |

| 152 | -43.67 | 1907.07 | |

| 693 | 497.33 | 247337.13 | |

| 50 | -145.67 | 21219.75 | |

| 111 | -84.67 | 7169.01 | |

| Total | — | — | 324044 |

The sum of squared errors is:

\[ \begin{aligned} \text{SS} &= 37121.73 + 2669.79 + 5087.97 + 186.87 + 1344.69 + 1907.07 + 247337.13 + 21219.75 + 7169.01 \\ &= 324044 \\ \end{aligned} \]

The variance is the sum of squared errors divided by the degrees of freedom:

\[ \begin{aligned} s^2 &= \frac{SS}{N - 1} \\ &= \frac{324044}{8} \\ &= 40505.5 \end{aligned} \] The standard deviation is the square root of the variance:

\[ \begin{aligned} s &= \sqrt{s^2} \\ &= \sqrt{40505.5} \\ &= 201.2598 \end{aligned} \]

The standard error is:

\[ \begin{aligned} SE &= \frac{s}{\sqrt{N}} \\ &= \frac{201.2598}{\sqrt{9}} \\ &= 67.0866 \end{aligned} \]

The sample is small, so to calculate the confidence interval we need to find the appropriate value of t. First we need to calculate the degrees of freedom, \(N − 1\). With 9 data points, the degrees of freedom are 8. For a 95% confidence interval we can look up the value in the column labelled ‘Two-Tailed Test’, ‘0.05’ in the table of critical values of the t-distribution (Appendix). The corresponding value is 2.31. The confidence interval is, therefore, given by:

\[ \begin{aligned} \text{95% CI}_\text{lower boundary} &= \overline{X}-(2.31 \times SE)) \\ &= 195.67 – (2.31 × 67.0866) \\ & = 40.70 \\ \text{95% CI}_\text{upper boundary} &= \overline{X}+(2.31 \times SE) \\ &= 195.67 + (2.31 × 67.0866) \\ &= 350.64 \end{aligned} \]

Chapter 3

Task 3.1

What is an effect size and how is it measured?

An effect size is an objective and standardized measure of the magnitude of an observed effect. Measures include Cohen’s d, the odds ratio and Pearson’s correlations coefficient, r. Cohen’s d, for example, is the difference between two means divided by either the standard deviation of the control group, or by a pooled standard deviation.

Task 3.2

In Chapter 1 (Task 8) we looked at an example of how many games it took a sportsperson before they hit the ‘red zone’, then in Chapter 2 we looked at data from a rival club. Compute and interpret Cohen’s \(\hat{d}\) for the difference in the mean number of games it took players to become fatigued in the two teams mentioned in those tasks.

Cohen’s d is defined as:

\[ \hat{d} = \frac{\bar{X_1}-\bar{X_2}}{s} \]

There isn’t an obvious control group, so let’s use a pooled estimate of the standard deviation:

\[ \begin{aligned} s_p &= \sqrt{\frac{(N_1-1) s_1^2+(N_2-1) s_2^2}{N_1+N_2-2}} \\ &= \sqrt{\frac{(11-1)4.15^2+(11-1)4.34^2}{11+11-2}} \\ &= \sqrt{\frac{360.23}{20}} \\ &= 4.24 \end{aligned} \]

Therefore, Cohen’s \(\hat{d}\) is:

\[ \hat{d} = \frac{10.27-9}{4.24} = 0.30 \]

Therefore, the second team fatigued in fewer matches than the first team by about 1/3 standard deviation. By the benchmarks that we probably shouldn’t use, this is a small to medium effect, but I guess if you’re managing a top-flight sports team, fatiguing 1/3 of a standard deviation faster than one of your opponents could make quite a substantial difference to your performance and team rotation over the season.

Task 3.3

Calculate and interpret Cohen’s \(\hat{d}\) for the difference in the mean duration of the celebrity marriages in Chapter 1 (Task 9) and me and my friend’s marriages (Chapter 2, Task 13).

Cohen’s \(\hat{d}\) is defined as:

\[ \hat{d} = \frac{\bar{X_1}-\bar{X_2}}{s} \]

There isn’t an obvious control group, so let’s use a pooled estimate of the standard deviation:

\[ \begin{aligned} s_p &= \sqrt{\frac{(N_1-1) s_1^2+(N_2-1) s_2^2}{N_1+N_2-2}} \\ &= \sqrt{\frac{(11-1)476.29^2+(9-1)8275.91^2}{11+9-2}} \\ &= \sqrt{\frac{550194093}{18}} \\ &= 5528.68 \end{aligned} \]

Therefore, Cohen’s d is: \[\hat{d} = \frac{5057-238.91}{5528.68} = 0.87\] Therefore, my friend’s marriages are 0.87 standard deviations longer than the sample of celebrities. By the benchmarks that we probably shouldn’t use, this is a large effect.

Task 3.4

What are the problems with null hypothesis significance testing?

- We can’t conclude that an effect is important because the p-value from which we determine significance is affected by sample size. Therefore, the word ‘significant’ is meaningless when referring to a p-value.

- The null hypothesis is never true. If the p-value is greater than .05 then we can decide to reject the alternative hypothesis, but this is not the same thing as the null hypothesis being true: a non-significant result tells us is that the effect is not big enough to be found but it doesn’t tell us that the effect is zero.

- A significant result does not tell us that the null hypothesis is false (see text for details).

- It encourages all or nothing thinking: if p < 0.05 then an effect is significant, but if p > 0.05 it is not. So, a p = 0.0499 is significant but a p = 0.0501 is not, even though these ps differ by only 0.0002.

Task 3.5

What is the difference between a confidence interval and a credible interval?

A 95% confidence interval is set so that before the data are collected there is a long-run probability of 0.95 (or 95%) that the interval will contain the true value of the parameter. This means that in 100 random samples, the intervals will contain the true value in 95 of them but won’t in 5. Once the data are collected, your sample is either one of the 95% that produces an interval containing the true value, or one of the 5% that does not. In other words, having collected the data, the probability of the interval containing the true value of the parameter is either 0 (it does not contain it) or 1 (it does contain it), but you do not know which. A credible interval is different in that it reflects the plausible probability that the interval contains the true value. For example, a 95% credible interval has a plausible 0.95 probability of containing the true value.

Task 3.6

What is a meta-analysis?

Meta-analysis is where effect sizes from different studies testing the same hypothesis are combined to get a better estimate of the size of the effect in the population.

Task 3.7

Describe what you understand by the term Bayes factor.

The Bayes factor is the ratio of the probability of the data given the alternative hypothesis to that of the data given the null hypothesis. A Bayes factor less than 1 supports the null hypothesis (it suggests the data are more likely given the null hypothesis than the alternative hypothesis); conversely, a Bayes factor greater than 1 suggests that the observed data are more likely given the alternative hypothesis than the null. Values between 1 and 3 are considered evidence for the alternative hypothesis that is ‘barely worth mentioning’, values between 3 and 10 are considered to indicate evidence for the alternative hypothesis that ‘has substance’, and values greater than 10 are strong evidence for the alternative hypothesis.

Task 3.8

Various studies have shown that students who use laptops in class often do worse on their modules (Payne-Carter, Greenberg, & Walker, 2016; Sana, Weston, & Cepeda, 2013). Table 3.3 (reproduced in in Table 8) shows some fabricated data that mimics what has been found. What is the odds ratio for passing the exam if the student uses a laptop in class compared to if they don’t?

| Laptop | No Laptop | Sum | |

|---|---|---|---|

| Pass | 24 | 49 | 73 |

| Fail | 16 | 11 | 27 |

| Sum | 40 | 60 | 100 |

First we compute the odds of passing when a laptop is used in class:

\[ \begin{aligned} \text{Odds}_{\text{pass when laptop is used}} &= \frac{\text{Number of laptop users passing exam}}{\text{Number of laptop users failing exam}} \\ &= \frac{24}{16} \\ &= 1.5 \end{aligned} \]

Next we compute the odds of passing when a laptop is not used in class:

\[ \begin{aligned} \text{Odds}_{\text{pass when laptop is not used}} &= \frac{\text{Number of students without laptops passing exam}}{\text{Number of students without laptops failing exam}} \\ &= \frac{49}{11} \\ &= 4.45 \end{aligned} \]

The odds ratio is the ratio of the two odds that we have just computed:

\[ \begin{aligned} \text{Odds Ratio} &= \frac{\text{Odds}_{\text{pass when laptop is used}}}{\text{Odds}_{\text{pass when laptop is not used}}} \\ &= \frac{1.5}{4.45} \\ &= 0.34 \end{aligned} \]

The odds of passing when using a laptop are 0.34 times those when a laptop is not used. If we take the reciprocal of this, we could say that the odds of passing when not using a laptop are 2.97 times those when a laptop is used.

Task 3.9

From the data in Table 3.1 (reproduced in Table 8) what is the conditional probability that someone used a laptop given that they passed the exam, p(laptop|pass). What is the conditional probability of that someone didn’t use a laptop in class given they passed the exam, p(no laptop |pass)?

The conditional probability that someone used a laptop given they passed the exam is 0.33, or a 33% chance:

\[ p(\text{laptop|pass})=\frac{p(\text{laptop ∩ pass})}{p(\text{pass})}=\frac{{24}/{100}}{{73}/{100}}=\frac{0.24}{0.73}=0.33 \]

The conditional probability that someone didn’t use a laptop in class given they passed the exam is 0.67 or a 67% chance.

\[ p(\text{no laptop|pass})=\frac{p(\text{no laptop ∩ pass})}{p(\text{pass})}=\frac{{49}/{100}}{{73}/{100}}=\frac{0.49}{0.73}=0.67 \]

Task 3.10

Using the data in Table 3.1 (reproduced in Table 8), what are the posterior odds of someone using a laptop in class (compared to not using one) given that they passed the exam?

The posterior odds are the ratio of the posterior probability for one hypothesis to another. In this example it would be the ratio of the probability that a used a laptop given that they passed (which we have already calculated above to be 0.33) to the probability that they did not use a laptop in class given that they passed (which we have already calculated above to be 0.67). The value turns out to be 0.49, which means that the probability that someone used a laptop in class if they passed the exam is about half of the probability that someone didn’t use a laptop in class given that they passed the exam.

\[ \text{posterior odds}= \frac{p(\text{hypothesis 1|data})}{p(\text{hypothesis 2|data})} = \frac{p(\text{laptop|pass})}{p(\text{no laptop| pass})} = \frac{0.33}{0.67} = 0.49 \]

Chapter 4

Task 4.1

No answer required.

Task 4.2

What are these icons shortcuts to:

-

: This icon enables R syntax mode, which shows R syntax for the analysis, useful for sharing which options you used in the analysis.

-

: This icon lets you edit the analysis name, useful for organizing a .jasp file with multiple analyses.

: This icon lets you edit the analysis name, useful for organizing a .jasp file with multiple analyses. -

: This icon lets you duplicate the analysis, useful for rerunning an analysis, but with some small changes to its options.

-

: This icon opens the JASP help files, which describe the input and output elements for the analysis.

: This icon opens the JASP help files, which describe the input and output elements for the analysis. -

: This icon deletes the analysis.

: This icon deletes the analysis.

Task 4.3

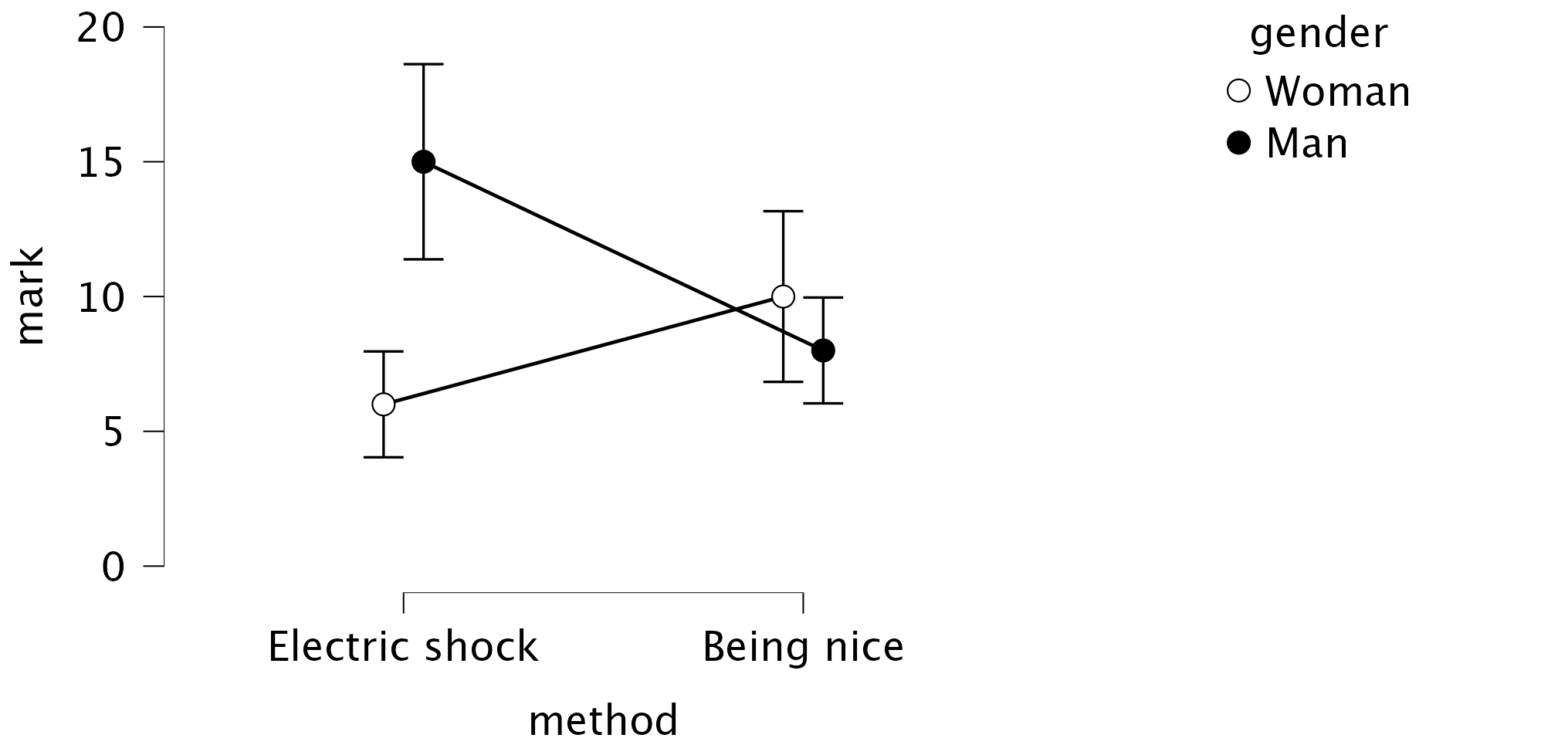

The data below show the score (out of 20) for 20 different students, some of whom are male and some female, and some of whom were taught using positive reinforcement (being nice) and others who were taught using punishment (electric shock). Enter these data into JASP and save the file as

teachin.jasp. (Clue: the data should not be entered in the same way that they are laid out below.)

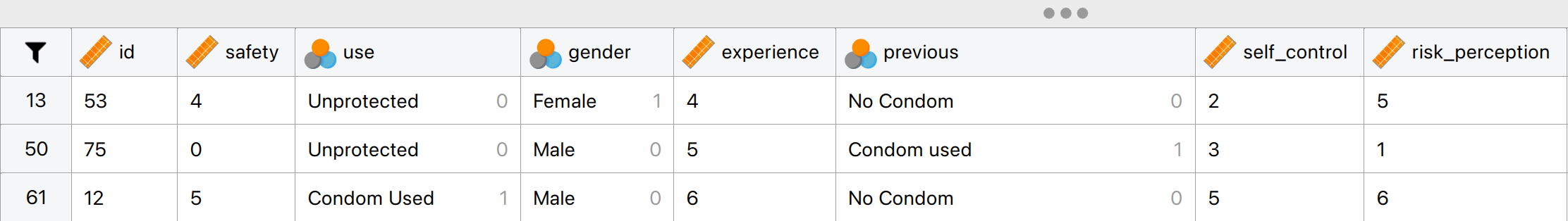

The data can be found in the file teach_method.jasp and the first four rows should look like this:

Task 4.4

Thinking back to Labcoat Leni’s Real Research 3.1, Oxoby (2008) also measured the minimum acceptable offer; these MAOs (in dollars) are below (again, these are approximations based on the plots in the paper). Enter these data into JASP and save this file as

acdc.jasp.

- Bon Scott group: 2, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5

- Brian Johnson group: 0, 1, 2, 2, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 4, 1

The data can be found in the file acdc.jasp and the first four rows should look like this:

Task 4.5

According to some highly unscientific research done by a UK department store chain and reported in Marie Clare magazine https://tinyurl.com/mcsgh shopping is good for you: they found that the average women spends 150 minutes and walks 2.6 miles when she shops, burning off around 385 calories. In contrast, men spend only about 50 minutes shopping, covering 1.5 miles. This was based on strapping a pedometer on a mere 10 participants. Although I don’t have the actual data, some simulated data based on these means are below. Enter these data into JASP and save them as

shopping.jasp.

The data can be found in the file shopping.jasp.

Task 4.6

I wondered whether a fish or cat made a better pet. I found some people who had either fish or cats as pets and measured their life satisfaction and how much they like animals. Enter these data into JASP and save as

pets.jasp.

The data can be found in the file pets.jasp.

Task 4.7

One of my favourite activities, especially when trying to do brain-melting things like writing statistics books, is drinking tea. I am English, after all. Fortunately, tea improves your cognitive function, well, in older Chinese people at any rate (Feng et al., 2010). I may not be Chinese and I’m not that old, but I nevertheless enjoy the idea that tea might help me think. Here’s some data based on Feng et al.’s study that measured the number of cups of tea drunk and cognitive functioning in 15 people. Enter these data in JASP and save the file as

tea_15.jasp.

The data can be found in the file tea_15.jasp.

Task 4.8

Statistics and maths anxiety are common and affect people’s performance on maths and stats assignments; women in particular can lack confidence in mathematics (Field, 2010, 2014). Zhang et al. (2013) did an intriguing study in which students completed a maths test in which some put their own name on the test booklet, whereas others were given a booklet that already had either a male or female name on. Participants in the latter two conditions were told that they would use this other person’s name for the purpose of the test. Women who completed the test using a different name performed better than those who completed the test using their own name. (There were no such effects for men.) The data below are a random subsample of Zhang et al.’s data. Enter them into JASP and save the file as

zhang_sample.jasp

The correct format is as in the file zhang_sample.jasp.

Task 4.9

What is a nominal variable?

A nominal variable is a type of categorical variable where the categories (i.e., levels) have no inherent order or ranking. They are simply labels used to distinguish different groups. Examples of nominal variables are Eye-color (levels: brown/blue/green) and Types of Pet (levels: dog/cat/bird/fish).

Task 4.10

What is the difference between wide and long format data?

Long format data are arranged such that scores on an outcome variable appear in a single column and rows represent a combination of the attributes of those scores (for example, the entity from which the scores came, when the score was recorded etc.). In long format data, scores from a single entity can appear over multiple rows where each row represents a combination of the attributes of the score (e.g., levels of an independent variable or time point at which the score was recorded etc.). In contrast, wide format data are arranged such that scores from a single entity appear in a single row and levels of independent or predictor variables are arranged over different columns. As such, in designs with multiple measurements of an outcome variable, for each case the outcome variable scores will be spread across multiple columns with each column containing the score for one level of an independent variable, or for the time point at which the score was observed. Columns can also represent attributes of the score or entity that are fixed over the duration of data collection (e.g., participant sex, employment status etc.).

Chapter 5

Task 5.1

The file

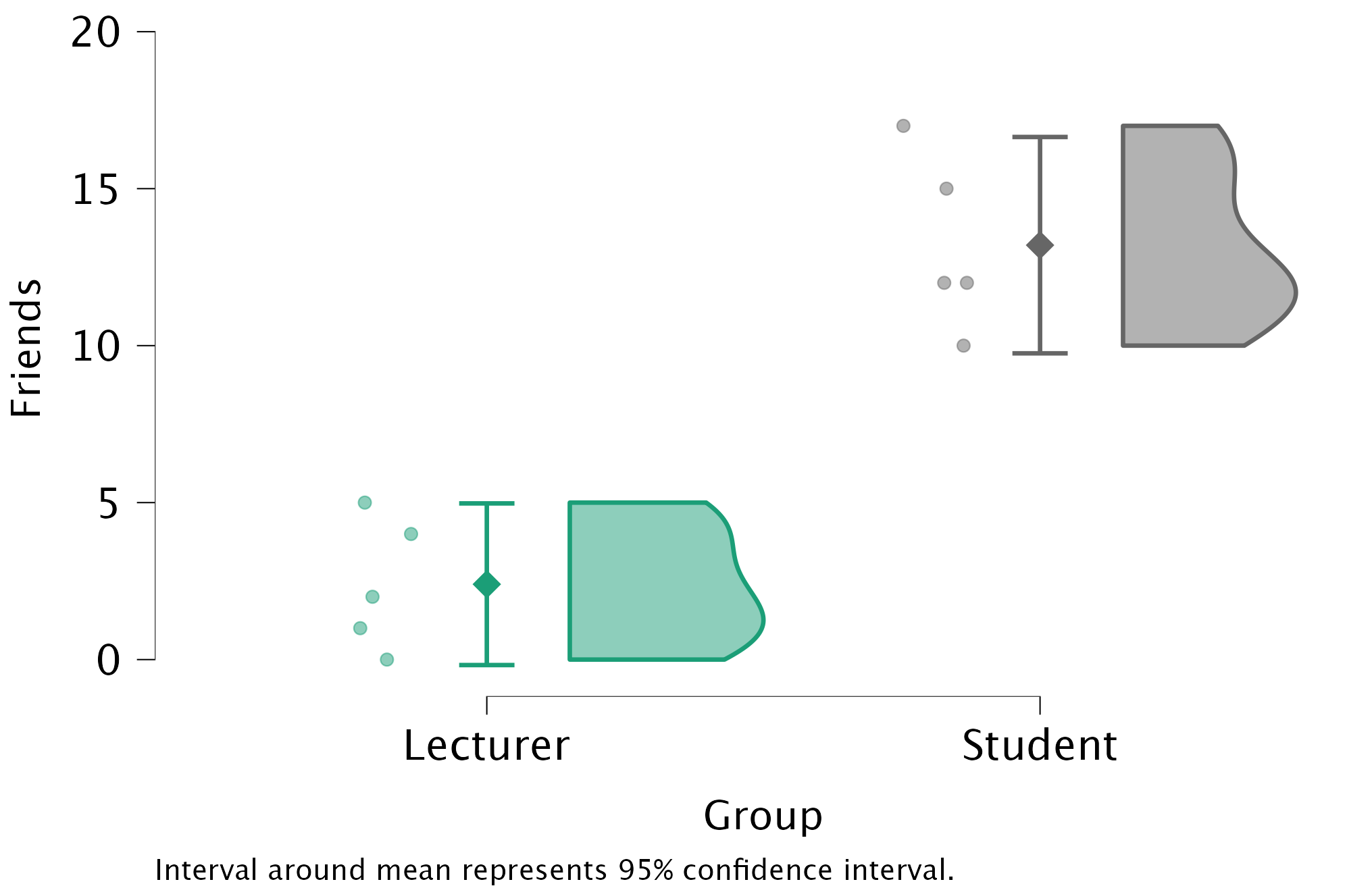

students.jaspcontains data relating to groups of students and lecturers. Using these data plot and interpret a raincloud plot showing the mean number of friends that students and lecturers have.

First of all access the Raincloud plot analysis in the Descriptives Module. Here, specify the variable Friends as the Dependent Variable, while using Group as the Primary Factor. You can then display the Mean and its Confidence Interval by going to the Advanced tab and ticking Mean and Interval around mean.

The resulting raincloud plot will look like this:

We can conclude that, on average, students had more friends than lecturers. The file alex_05_01-06.jasp contains the output and settings discussed above.

Task 5.2

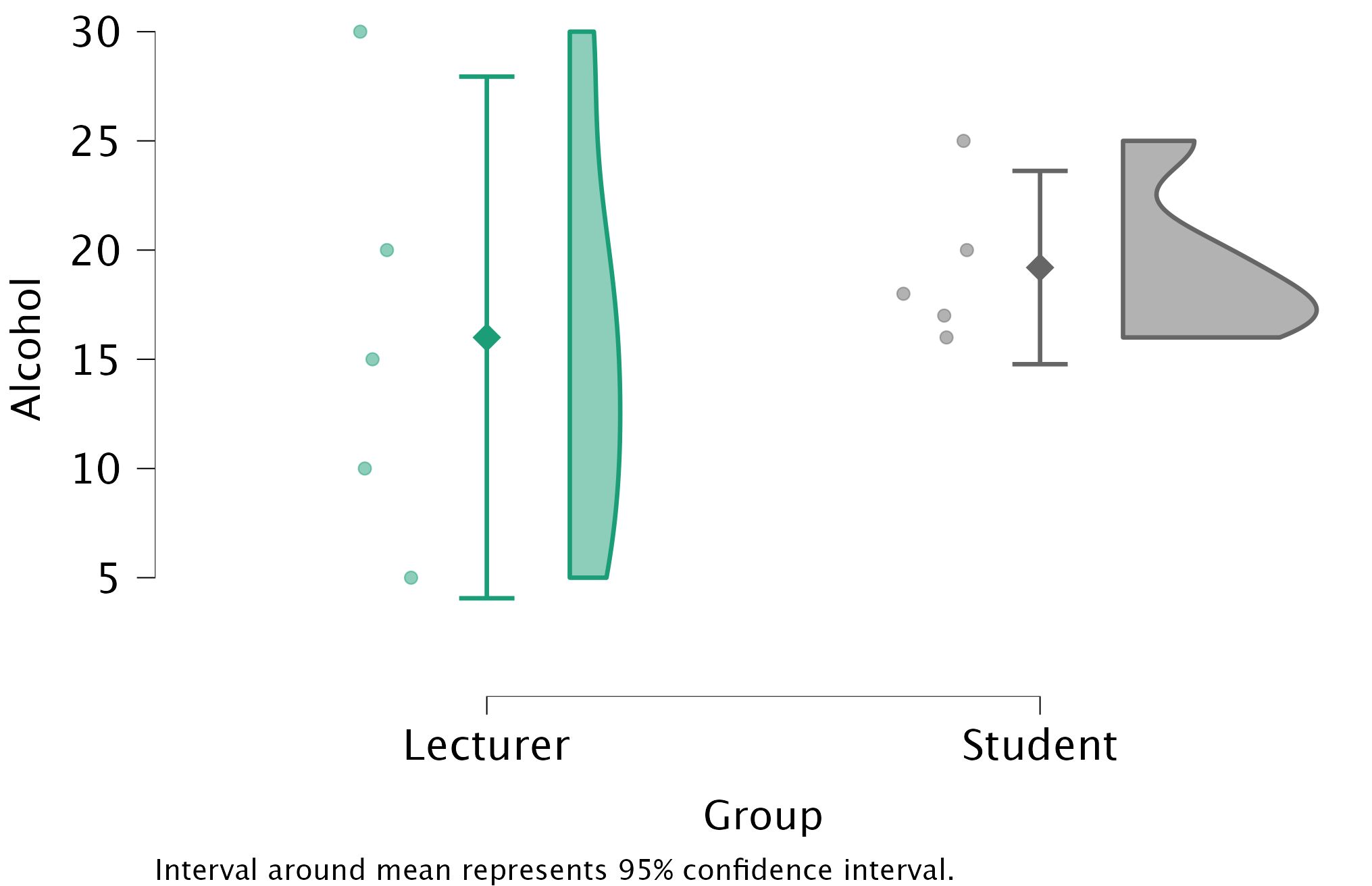

Using the same data, plot and interpret a raincloud plot showing the mean alcohol consumption for students and lecturers.

Follow the same steps as in Task 5.1, but now with alcohol consumption as the dependent variable.

The raincloud plot will look like this:

We can conclude that, on average, students and lecturers drank similar amounts, but the error bars tell us that the mean is a better representation of the population for students than for lecturers (there is more variability in lecturers’ drinking habits compared to students’). The file alex_05_01-06.jasp contains the output and settings discussed above.

Task 5.3

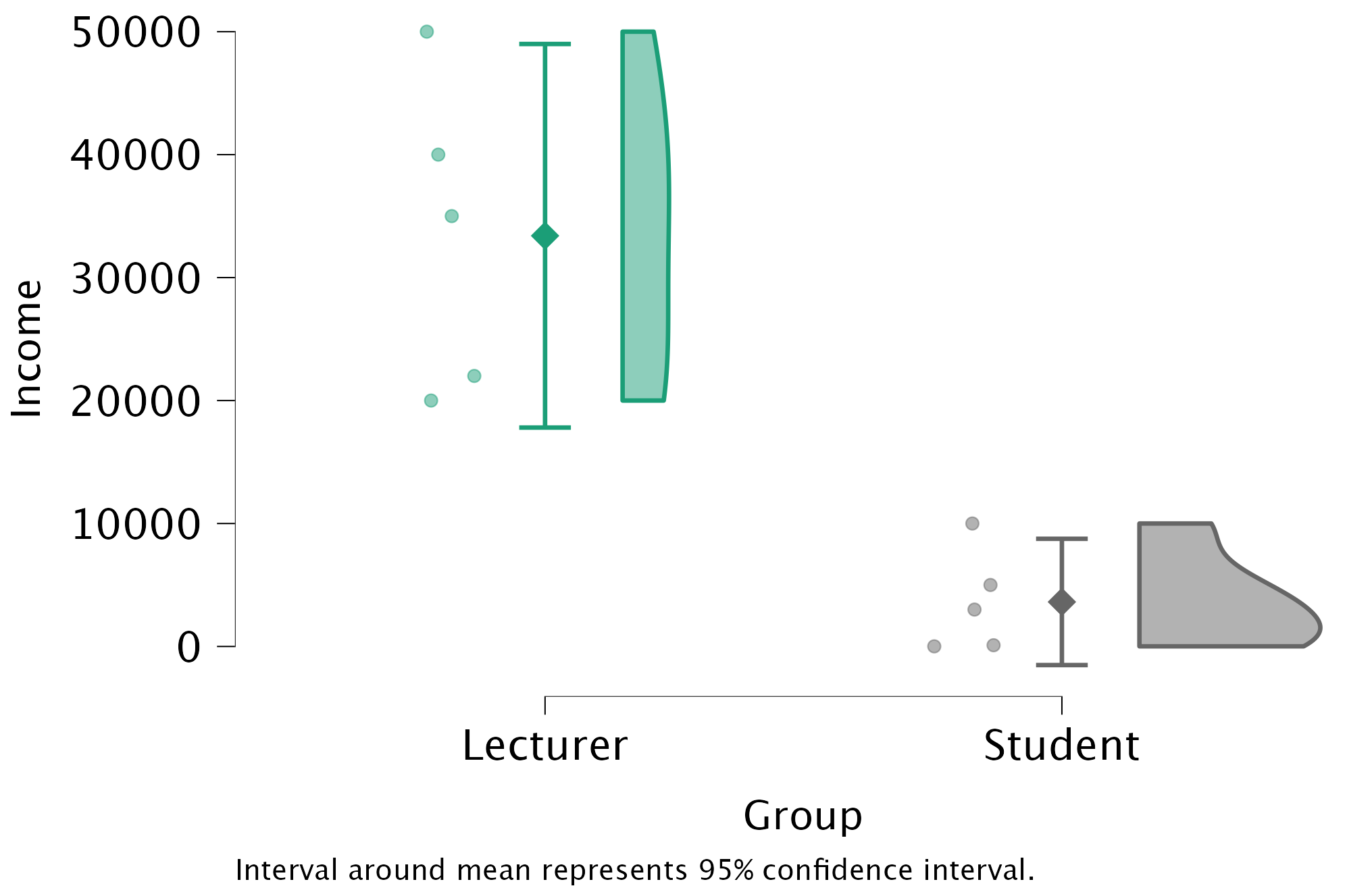

Using the same data, plot and interpret a raincloud plot showing the mean income for students and lecturers.

Follow the same steps as in Task 5.1, but now with income as the dependent variable.

The raincloud plot will look like this:

We can conclude that, on average, students earn less than lecturers, but the error bars tell us that the mean is a better representation of the population for students than for lecturers (there is more variability in lecturers’ income compared to students’). The file alex_05_01-06.jasp contains the output and settings discussed above.

Task 5.4

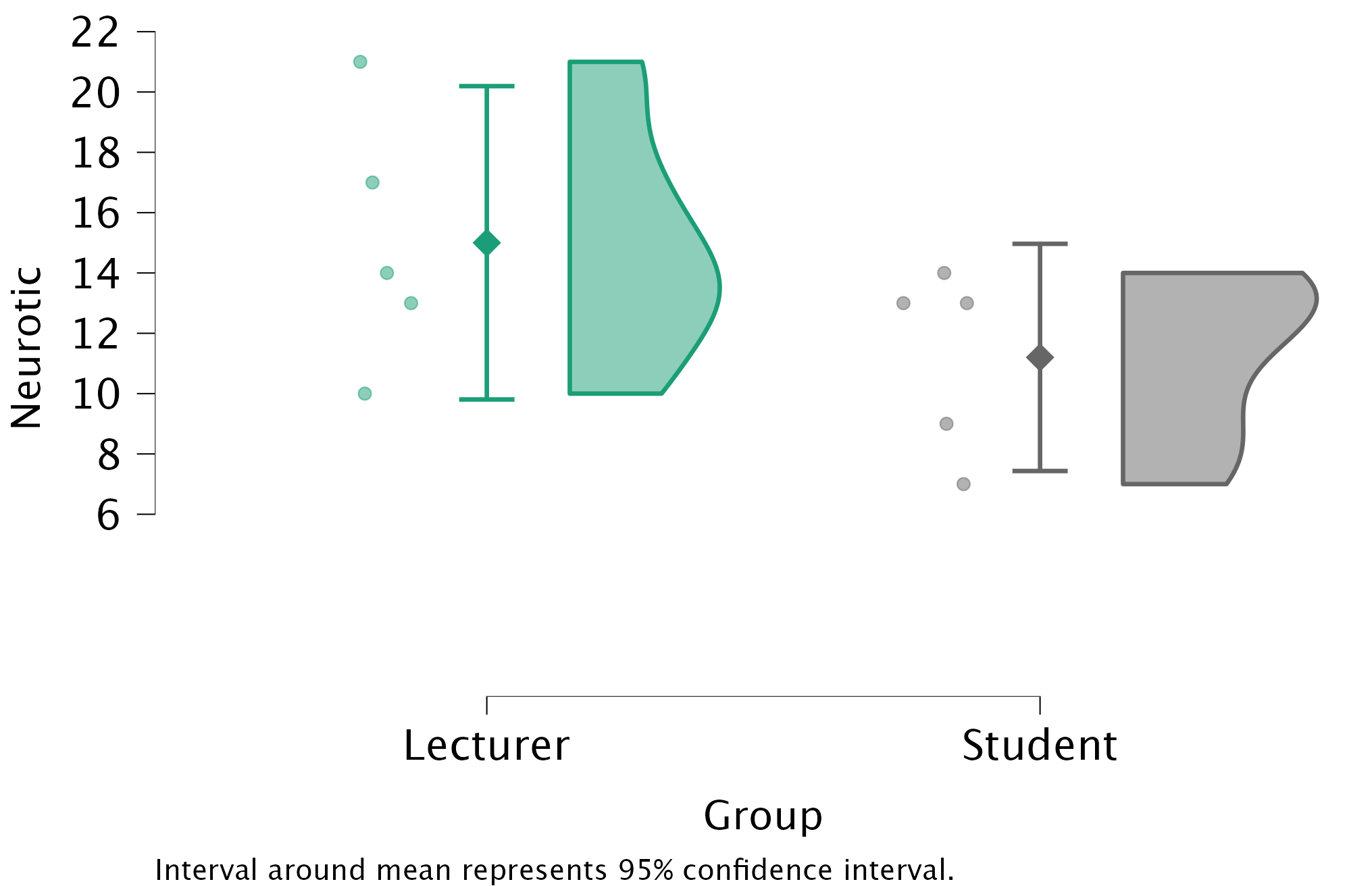

Using the same data, plot and interpret a raincloud plot showing the mean neuroticism for students and lecturers.

Follow the same steps as in Task 5.1, but now with neuroticism as the dependent variable. The raincloud plot will look like this:

We can conclude that, on average, students are slightly less neurotic than lecturers. The file alex_05_01-06.jasp contains the output and settings discussed above.

Task 5.5

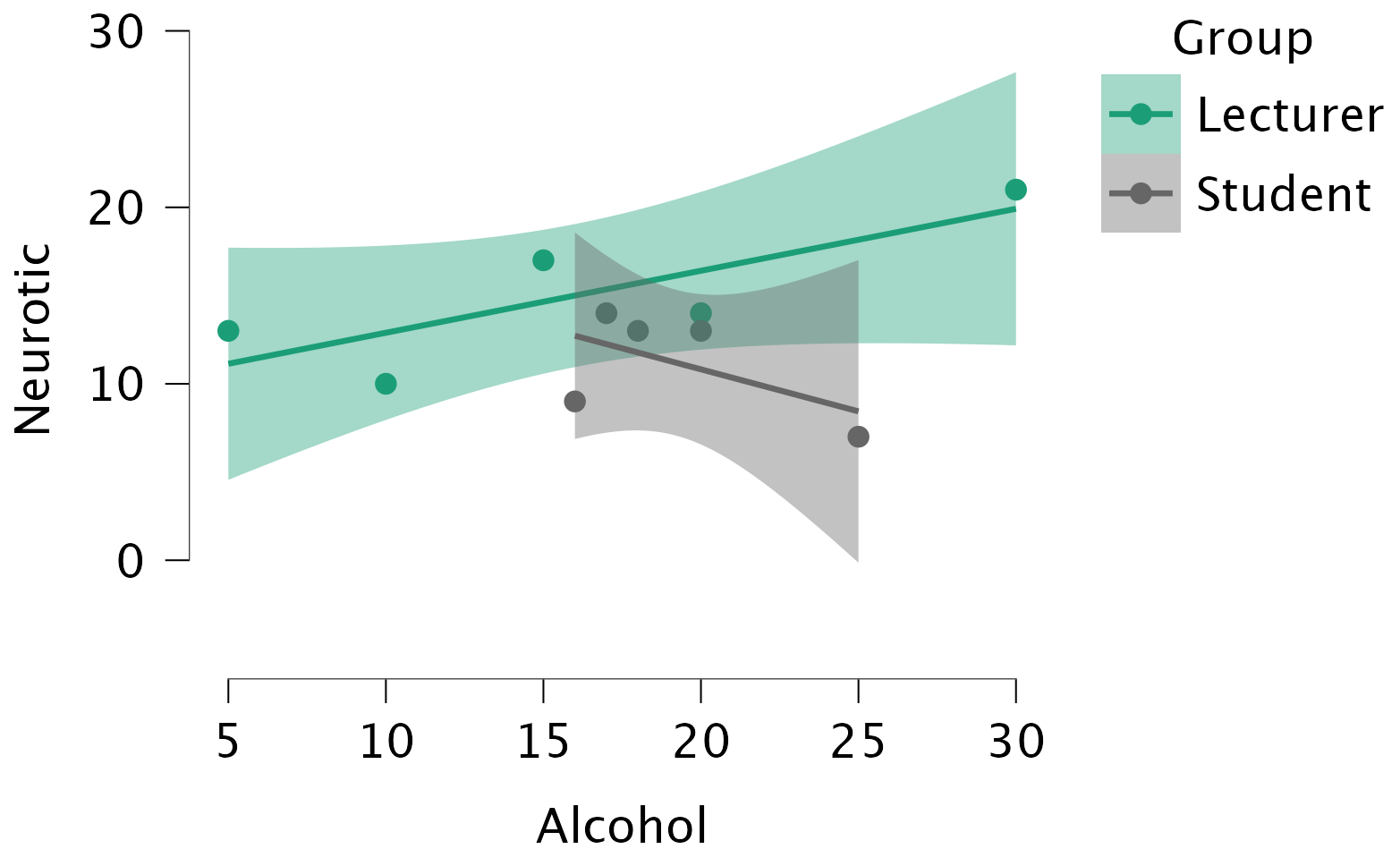

Using the same data, plot and interpret a scatterplot with regression lines of alcohol consumption and neuroticism grouped by lecturer/student.

Go to Descriptives \(\rightarrow\) Descriptive Statistics and drag Alcohol and Neurotic into the Variables box (whichever variable you drag there first will end up on the \(x\)-axis. To get a scatterplot with split lines later, specify the grouping variable (lecturers or students) as the Split variable. Next, go to the Customizable Plots tab and tick the box Scatter plots to produce the following plot:

We can conclude that for lecturers, as neuroticism increases so does alcohol consumption (a positive relationship), but for students the opposite is true, as neuroticism increases alcohol consumption decreases. The file alex_05_01-06.jasp contains the output and settings discussed above.

Task 5.6

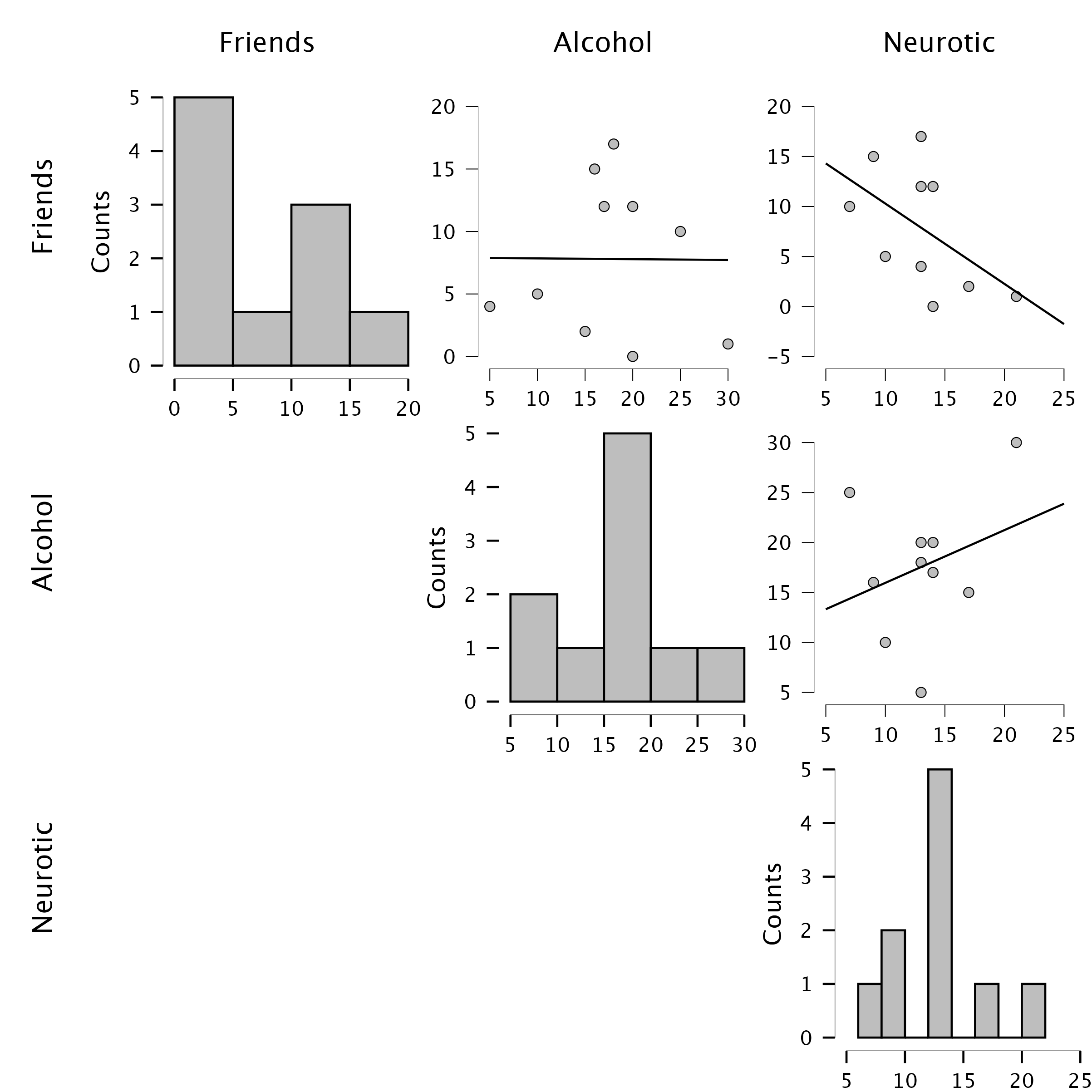

Using the same data, plot and interpret a scatterplot matrix with regression lines of alcohol consumption, neuroticism and number of friends.

Go to Descriptives \(\rightarrow\) Descriptive Statistics and drag Alcohol, Neurotic, and Friends into the Variables box. To get a matrix scatterplot, go to the Basic Plots tab and tick the box Correlation plots to produce the follow plot:

We can conclude that there is no relationship (flat line) between the number of friends and alcohol consumption; there was a negative relationship between how neurotic a person was and their number of friends (line slopes downwards); and there was a slight positive relationship between how neurotic a person was and how much alcohol they drank (line slopes upwards). The file alex_05_01-06.jasp contains the output and settings discussed above.

Task 5.7

Using the

zang_sample.jaspdata from Chapter 4 (Task 8), plot a raincloud plot of the mean test accuracy as a function of the type of name participants completed the test under (x-axis) and whether they identified as man or woman (different coloured rainclouds).

Go to the Raincloud plot analysis in the Descriptives Module. Here, specify the variable accuracy as the Dependent Variable, while using name_type as the Primary Factor and sex as the primary factor. You can then display the Mean and its Confidence Interval by going to the Advanced tab and ticking Mean and Interval around mean.

The plot shows that, on average, males did better on the test than females when using their own name (the control) but also when using a fake female name. However, for participants who did the test under a fake male name, the women did better than males. The file alex_05_07.jasp contains the output and settings discussed above.

Task 5.8

Using the

pets.jaspdata from Chapter 4 (Task 6), plot two raincloud plots comparing scores when having a fish or cat as a pet (x-axis): one for the animal liking variable, and the other for life satisfaction.

Go to the Raincloud plot analysis in the Descriptives Module. Here, specify the variable accuracy as the Dependent Variable, while using name_type as the Primary Factor and sex as the primary factor. You can then display the Mean and its Confidence Interval by going to the Advanced tab and ticking Mean and Interval around mean.

For animal love, the plot shows that the mean love of animals was the same for people with cats and fish as pets. For life satisfaction, the plot shows that, on average, life satisfaction was higher in people who had cats for pets than for those with fish. The file alex_05_08.jasp contains the output and settings discussed above.

Task 5.9

Using the same data as above, plot a scatterplot of animal liking scores against life satisfaction (plot scores for those with fishes and cats in different colours).

Follow the same steps as in Task 5.6, but with animal and life_satisfaction as Variables and pet as the Split variable. The file alex_05_09.jasp contains the plot and settings. We can conclude that as love of animals increases, so does life satisfaction (a positive relationship). This relationship seems to be similar for both types of pets (i.e., both lines have a similar slope).

Task 5.10

Using the

tea_15.jaspdata from Chapter 4 (Task 7), plot a scatterplot showing the number of cups of tea drunk (x-axis) against cognitive functioning (y-axis).

The scatterplot (and near-flat line especially) tells us that there is a tiny relationship (practically zero) between the number of cups of tea drunk per day and cognitive function. The file alex_05_10.jasp contains the plot and settings.

Chapter 6

Task 6.1

Using the

notebook.jaspdata, check the assumptions of normality and homogeneity of variance for the two films (ignoregender). Are the assumptions met?

The results/settings can be found in the file alex_06_01.jasp. The results can be viewed in your browser here.

The Q-Q plots suggest that for both films the expected quantile points are close to those that would be expected from a normal distribution (i.e. the dots fall close to the diagonal line). The descriptive statistics confirm this conclusion. The skewness statistics gives rise to a z-score of \(z_\text{skew} = \frac{−0.302}{0.512} = –0.59\) for The Notebook, and \(z_\text{skew} = \frac{0.04}{0.512} = 0.08\) for a documentary about notebooks. These show no excessive (or significant) skewness. For kurtosis these values are \(z_\text{kurtosis} = \frac{−0.281}{0.992} = –0.28\) for The Notebook, and \(z_\text{kurtosis} = \frac{–1.024}{0.992} = –1.03\) for a documentary about notebooks. None of these z-scores are large enough to concern us. More important the raw values of skew and kurtosis are close enough to zero.

In the Chapter we talk a lot about NOT using significance tests of assumptions, so proceed with caution here. The Shapiro-Wilk test shows no significant deviation from normality for both films. If you chose to ignore my advice and use these sorts of tests then you might assume normality. However, the sample is small and these tests would have been very underpowered to detect a deviation from normal.

Task 6.2

The file

jasp_exam.jaspcontains data on students’ performance on an JASP exam. Four variables were measured:exam(first-year JASP exam scores as a percentage),computer(measure of computer literacy as a percentage),lecture(percentage of JASP lectures attended) andnumeracy(a measure of numerical ability out of 15). There is a variable calleduniindicating whether the student attended Sussex University (where I work) or Duncetown University. Compute and interpret descriptive statistics forexam,computer,lectureandnumeracyfor the sample as a whole.

The results/settings can be found in the file alex_06_02.jasp. The results can be viewed in your browser here.

The output shows the table of descriptive statistics for the four variables in this example. We can put the different variables in rows (rather than columns) by ticking the box Transpose descriptives table. To include the range of the scores, you can tick the box Range in the Statistics tab. Histograms are added by ticking the box Distribution plots in the Basic plots tab.

From the resulting table, we can see that, on average, students attended nearly 60% of lectures, obtained 58% in their JASP exam, scored only 51% on the computer literacy test, and only 5 out of 15 on the numeracy test. In addition, the standard deviation for computer literacy was relatively small compared to that of the percentage of lectures attended and exam scores. The range of scores on the exam was wide (15-99%) as was lecture attendence (8-100%).

Descriptive statistics and histograms are a good way of getting an instant picture of the distribution of your data. This snapshot can be very useful:

- The exam scores (

exam) look suspiciously bimodal (there are two peaks, indicative of two modes). The bimodal distribution of JASP exam scores alerts us to a trend that students are typically either very good at statistics or struggle with it (there are relatively few who fall in between these extremes). Intuitively, this finding fits with the nature of the subject: once everything falls into place it’s possible to do very well on statistics modules, but before that enlightenment occurs it all seems hopelessly difficult! - The numeracy test (

numeracy) has produced very positively skewed data (the majority of people did very badly on this test and only a few did well). This corresponds to what the skewness statistic indicated. - Lecture attendance (

lectures) looks relatively normally distributed. There is a slight negative skew suggesting that although most students attend at least 40% of lectures there is a small tail of students whop attend very few lectures. These students might have disengaged from the module and perhaps need some help to get back on track. - Computer literacy (

computer) is fairly normally distributed. A few people are very good with computers and a few are very bad, but the majority of people have a similar degree of knowledge).

Task 6.3

Calculate and interpret the z-scores for skewness for all variables.

\[ \begin{aligned} z_{\text{skew, jasp}} &= \frac{−0.107}{0.241} = −0.44 \\ z_{\text{skew, numeracy}} &= \frac{0.961}{0.241} = 3.99 \\ z_{\text{skew, computer literacy}} &= \frac{-0.174}{0.241} = −0.72 \\ z_{\text{skew, attendance}} &= \frac{−0.422}{0.241} = −1.75 \\ \end{aligned} \]

It is pretty clear that the numeracy scores are quite positively skewed because they have a z-score that is unusually high (nearly 4 standard deviations above the expected value of 0). This skew indicates a pile-up of scores on the left of the distribution (so most students got low scores). For the other three variables, the z-scores fall within reasonable limits although (as we saw before, attendance is quite negatively skewed suggesting some students have disengaged from their statistics module.)

Task 6.4

Calculate and interpret the z-scores for kurtosis for all variables.

\[ \begin{aligned} z_{\text{kurtosis, jasp}} &= \frac{−1.105}{0.478} = −2.31 \\ z_{\text{kurtosis, numeracy}} &= \frac{0.946}{0.478} = 1.98 \\ z_{\text{kurtosis, computer literacy}} &= \frac{0.364}{0.478} = 0.76 \\ z_{\text{kurtosis, attendance}} &= \frac{-0.179}{0.478} = −0.37 \\ \end{aligned} \]

- The JASP scores have negative excess kurtosis and the distribution is so-called platykurtic. In practical terms this means that there are fewer extreme scores than expected in the the distribution (the tails of the distribution are said to be thin/light because there are fewer scores than expected in them).

- The numeracy scores have positive excess kurtosis and the distribution is so-called leptokurtic. In practical terms this means that there are more extreme scores than expected in the the distribution (the tails of the distribution are said to be fat/heavy because there are more scores than expected in them).

- For computer literacy and attendance scores, the levels of excess kurtosis are within reasonable boundaries of what we might expect. In a broad sense we can assume these distributions are approximately mesokurtic.

Task 6.5

Look at and interpret the descriptive statistics for

numeracyandexam, separate for each university.

If we want to obtain separate descriptive statistics for each of the universities, we can specify a Split variable. The results/settings can be found in the file alex_06_05.jasp. The results can be viewed in your browser here.

The output table now contains output separately for each university. From this table it is clear that Sussex students scored higher on both their JASP exam and the numeracy test than their Duncetown counterparts. Looking at the means, on average Sussex students scored an amazing 36% more on the JASP exam than Duncetown students, and had higher numeracy scores too (what can I say, my students are the best).

The histograms of these variables split according to the university attended show numerous things. The first interesting thing to note is that for exam marks, the distributions are both fairly normal. This seems odd because the overall distribution was bimodal. However, it starts to make sense when you consider that for Duncetown the distribution is centred around a mark of about 40%, but for Sussex the distribution is centred around a mark of about 76%. This illustrates how important it is to look at distributions within groups. If we were interested in comparing Duncetown to Sussex it wouldn’t matter that overall the distribution of scores was bimodal; all that’s important is that residuals within each group are from a normal distribution, and in this case it appears to be true. When the two samples are combined, these two normal distributions create a bimodal one (one of the modes being around the centre of the Duncetown distribution, and the other being around the centre of the Sussex data).

For numeracy scores, the distribution is slightly positively skewed (there is a larger concentration at the lower end of scores) in both the Duncetown and Sussex groups. Therefore, the overall positive skew observed before is due to the mixture of universities.

Task 6.6

Repeat Task 5 but for the computer

literacyand percentage of lectures attended.

The results/settings can be found in the file alex_06_06.jasp. The results can be viewed in your browser here.

The JASP output is again split for each university separately. From these tables it is clear that Sussex and Duncetown students scored similarly on computer literacy (both means are very similar). Sussex students attended slightly more lectures (63.27%) than their Duncetown counterparts (56.26%). The histograms are also split according to the university attended. All of the distributions look fairly normal. The only exception is the computer literacy scores for the Sussex students. This is a fairly flat distribution apart from a huge peak between 50 and 60%. It’s slightly heavy-tailed (right at the very ends of the curve the bars come above the line) and very pointy. This suggests positive kurtosis. If you examine the values of kurtosis you will find extreme positive kurtosis as indicated by a value that is more than 2 standard deviations from 0 (i.e. no excess kurtosis), \(z = \frac{1.38}{0.662} = 2.08\).

Task 6.7

Conduct and interpret a Shapiro-Wilk test for

numeracyandexam.

The correct response to this task should be “but you told me never to do a Shapiro-Wilk test”.

The Shapiro-Wilk (S-W) test can be accessed in the Descriptives analysis.

The results/settings can be found in the file alex_06_07.jasp. The results can be viewed in your browser here.

For JASP exam scores, the S-W test is significant, S-W = 0.96, p = 0.005, and this is true also for numeracy scores, S-W = 0.92, p < .001. These tests indicate that both distributions are significantly different from normal. This result is likely to reflect the bimodal distribution found for exam scores, and the positively skewed distribution observed in the numeracy scores. However, these tests confirm that these deviations were significant (but bear in mind that the sample is fairly big.)

As a final point, bear in mind that when we looked at the exam scores for separate groups, the distributions seemed quite normal; now if we’d asked for separate tests for the two universities (by dragging uni in the Split box) the S-W test will have been different. You can see this in the second analysis that’s listed in the .jasp file.

Note that the percentages on the JASP exam are not significantly different from normal within the two groups. This point is important because if our analysis involves comparing groups, then what’s important is not the overall distribution but the distribution in each group.

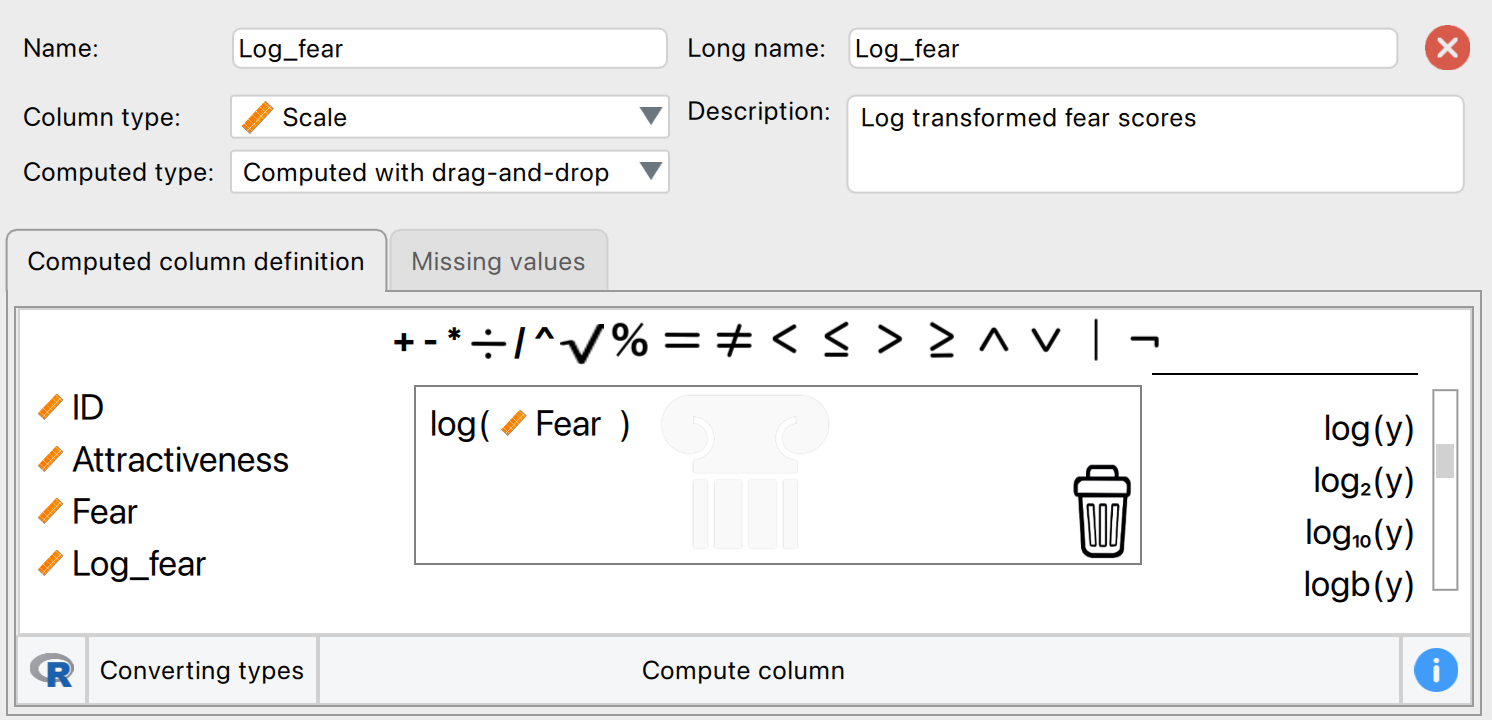

Task 6.9

Transform the

numeracyscores (which are positively skewed) using one of the transformations described in this chapter. Do the data become normal?

We can achieve these transformations using the Compute column functionality, using either the drag-and-drop mode (see Section Section 6.10.4) or syntax mode. For those of you wanting to use syntax mode, below are three lines of code for transforming numeracy to its natural logarithm, its square root, and its reciprocal.

ln(numeracy) # Natural logarithm

sqrt(numeracy) # Square root

1/numeracy # ReciprocalHaving created these variables, drag numeracy and your three new variables (in my case ln_numeracy, sqrt_numeracy, and recip_numeracy) to the box labelled Variables in the Descriptive Statistics analysis. Be sure to also tick the box Q-Q plots in the Basic plots tab. The results/settings and new variables can be found in the file alex_06_09.jasp. The results can be viewed in your browser here.

For each Q-Q plot we want to compare the distance of the points to the diagonal line to the same distances for the raw scores. For the raw scores, the observed values deviate from normal (the diagonal) at the extremes, but mainly for large observed values (because the distributioon is positively skewed).

- The log transformation improves the distribution a bit The positive skew is mitigated by the log transformation (large scores are made less extreme) resulting in dots on the Q-Q plot that are much closer to the line for large observed values.

- Similarly, the square root transformation mitigates the positive skew too by having a greater effect on large scores. The result is again a Q-Q plot with dots that are much closer to the line for large observed values that for the raw data.

- Conversely, the reciprocal transformation makes things worse! The result is a Q-Q plot with dots that are much further from the line than for the raw data.

Task 6.10

Find out what effect a natural log transformation would have on the four variables measured in

jasp_exam.jasp.

We follow the same steps as in Task 6.9, for transforming variables, but now apply the log() transformation to all four observed variables. The results/settings and new variables can be found in the file alex_06_10.jasp. The results can be viewed in your browser here.

- Numeracy: as a result of the transformation, the data seem somewhat more normally distributed (i.e., points in the Q-Q plot are more alligned along the diagonal).

- Exam: the bimodal distribution we saw before is not magically transformed away, and needs to be dealt with in another way, such as using the Split variable to assess normality for each group separately.

- Computer: the original variable seems to be more normally distributed than the transformed variable. This shows that the log-transformation can also have a detrimental effect!

- Numeracy: here, the transformation also does not seem to have a beneficial effect.

This reiterates my point from the book chapter that transformations are often not a magic solution to problems in the data.

Chapter 7

Task 7.1

A student was interested in whether there was a positive relationship between the time spent doing an essay and the mark received. He got 45 of his friends and timed how long they spent writing an essay (hours) and the percentage they got in the essay (

essay). He also translated these grades into their degree classifications (grade): in the UK, a student can get a first-class mark (the best), an upper-second-class mark, a lower second, a third, a pass or a fail (the worst). Using the data in the fileessay_marks.jaspfind out what the relationship was between the time spent doing an essay and the eventual mark in terms of percentage and degree class (draw a scatterplot too).

The results/settings can be found in the file alex_07_01.jasp. The results can be viewed in your browser here.

The results indicate that the relationship between time spent writing an essay and grade awarded was not significant, Pearson’s r = 0.27, 95% BCa CI [-0.018, 0.506], p = 0.077. Note that I conducted a two-tailed test here, which is better when you want to include a confidence interval. To test a one-tailed alternative hypothesis, you can change the option Alt. Hypothesis in JASP. In this case, it would yield a p-value that is significant for \(\alpha = 0.05\), and demonstrates why people who like to cheat at science like to change their alternative hypothesis after the results are in (i.e., HARK’ing).

The second part of the question asks us to do the same analysis but when the percentages are recoded into degree classifications. The degree classifications are ordinal data (not interval): they are ordered categories. So we shouldn’t use Pearson’s test statistic, but Spearman’s and Kendall’s ones instead.

In both cases the correlation is non-significant. There was no significant relationship between degree grade classification for an essay and the time spent doing it, \(\rho\) = 0.19, p = 0.204, and \(\tau\) = –0.16, p = 0.178. Note that the direction of the relationship has reversed. This has happened because the essay marks were recoded as 1 (first), 2 (upper second), 3 (lower second), and 4 (third), so high grades were represented by low numbers. This example illustrates one of the benefits of not taking continuous data (like percentages) and transforming them into categorical data: when you do, you lose information and often statistical power!

Task 7.2

Using the

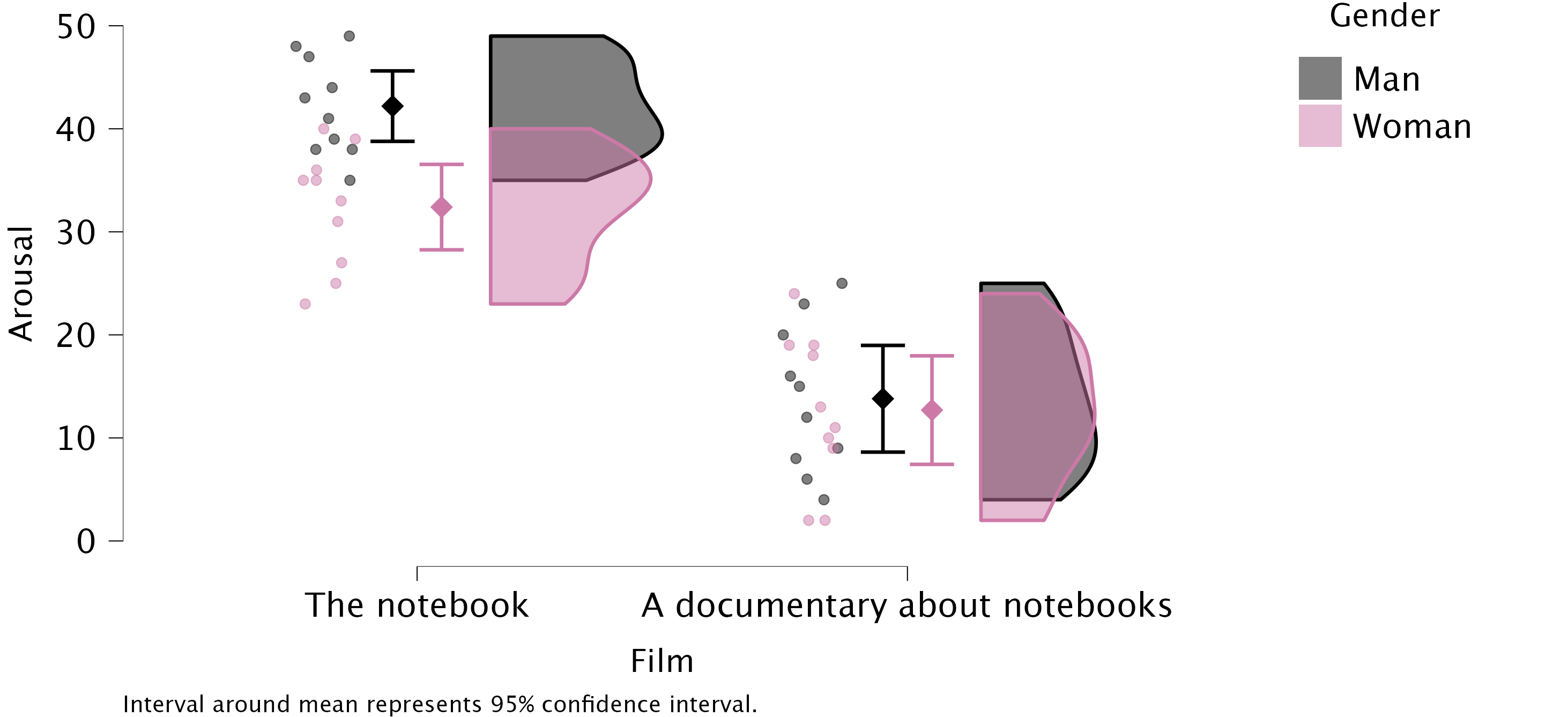

notebook.jaspdata from Chapter 3, quantify the relationship between the participant’s gender and arousal.

The results/settings can be found in the file alex_07_02-03.jasp. The results can be viewed in your browser here.

Gender identity is a categorical variable with two categories, therefore, we need to quantify this relationship using a point-biserial correlation. I used a two-tailed test because one-tailed tests should never really be used. I have also asked for the bootstrapped confidence intervals as they are robust. The results show that there was no significant relationship between biological sex and arousal because the p-value is larger than 0.05 and the bootstrapped confidence intervals cross zero, \(r_\text{pb}\) = –0.20, 95% BCa CI [–0.50, 0.13], p = 0.266.

Task 7.3

Using the notebook data again, quantify the relationship between the film watched and arousal.

The results/settings can be found in the file alex_07_02-03.jasp. The results can be viewed in your browser here.

There was a significant relationship between the film watched and arousal, \(r_\text{pb}\) = –0.87, 95% BCa CI [–0.91, –0.81], p < 0.001. Looking in the data at how the groups were coded, you should see that The Notebook had a code of 1, and the documentary about notebooks had a code of 2, therefore the negative coefficient reflects the fact that as film goes up (changes from 1 to 2) arousal goes down. Put another way, as the film changes from The Notebook to a documentary about notebooks, arousal decreases. So The Notebook gave rise to the greater arousal levels.

Task 7.4

As a statistics lecturer I am interested in the factors that determine whether a student will do well on a statistics course. Imagine I took 25 students and looked at their grades for my statistics course at the end of their first year at university: first, upper second, lower second and third class (see Task 1). I also asked these students what grade they got in their high school maths exams. In the UK GCSEs are school exams taken at age 16 that are graded A, B, C, D, E or F (an A grade is the best). The data for this study are in the file

grades.jasp. To what degree does GCSE maths grade correlate with first-year statistics grade?

The results/settings can be found in the file alex_07_04.jasp. The results can be viewed in your browser here.

Let’s look at these variables. In the UK, GCSEs are school exams taken at age 16 that are graded A, B, C, D, E or F. These grades are categories that have an order of importance (an A grade is better than all of the lower grades). In the UK, a university student can get a first-class mark, an upper second, a lower second, a third, a pass or a fail. These grades are categories, but they have an order to them (an upper second is better than a lower second). When you have categories like these that can be ordered in a meaningful way, the data are said to be ordinal. The data are not interval, because a first-class degree encompasses a 30% range (70–100%), whereas an upper second only covers a 10% range (60–70%). When data have been measured at only the ordinal level they are said to be non-parametric and Pearson’s correlation is not appropriate. Therefore, the Spearman correlation coefficient is used.

In the file, the scores are in two columns: one labelled stats and one labelled gcse. Each of the categories described above has been coded with a numeric value. In both cases, the highest grade (first class or A grade) has been coded with the value 1, with subsequent categories being labelled 2, 3 and so on. Note that for each numeric code I have provided a value label (just like we did for coding variables).

In the question I predicted that better grades in GCSE maths would correlate with better degree grades for my statistics course. This hypothesis is directional and so a one-tailed test could be selected; however, in the chapter I advised against one-tailed tests so I have done two-tailed.

The JASP output shows the Spearman correlation on the variables stats and gcse. The output shows a matrix giving the correlation coefficient between the two variables (0.455), underneath is the significance value of this coefficient (0.022) and then the sample size (25). [Note: it is good to check that the value of N corresponds to the number of observations that were made. If it doesn’t then data may have been excluded for some reason.]